Open RObot COntrol Software

1.12.1

Copyright © 2002,2003,2004,2005,2006,2007 Peter Soetens, FMTC

Orocos Real-Time Toolkit Version 1.12.1.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation, with no Invariant Sections, with no Front-Cover Texts, and with no Back-Cover Texts. A copy of this license can be found at http://www.fsf.org/copyleft/fdl.html.

Abstract

This document gives an introduction to building your own components for the Orocos ( Open RObot COntrol Software ) project.

Table of Contents

- 1. How to Read this Manual

- 2. Setting up the Component Interface

- 1. Introduction

- 2. Hello World !

- 3. Setting Up a Basic Component

- 3.1. Task Application Code

- 3.2. Starting a Component

- 3.3. A TaskContext's Error and Active states

- 3.4. A TaskContext's Run-Time Errors

- 3.5. Error States Example

- 3.6. Interfacing the TaskContext

- 3.7. Introducing the TaskContext's Interface

- 3.8. The Data Flow Interface

- 3.9. The Method Interface

- 3.10. Method Argument and Return Types

- 3.11. The Attributes and Properties Interface

- 3.12. The Command Interface

- 3.13. The Event Interface

- 4. Connecting TaskContexts

- 5. Using Tasks

- 6. Deploying Components

- 7. Advanced Techniques

- 3. Orocos Scripting Reference

- 4. Distributing Orocos Components with CORBA

- 5. Core Library Reference

- 6. OS Abstraction Reference

- 7. Hardware Device Interfaces

List of Figures

- 1.1. Orocos as Middleware

- 1.2. Real-Time Toolkit Layers

- 2.1. Components Run in Threads

- 2.2. Schematic Overview of a TaskContext

- 2.3. Schematic Overview of the Hello Component.

- 2.4. Schematic Overview of a TaskContext

- 2.5. TaskContext State Diagram

- 2.6. Executing a TaskContext

- 2.7. Extended TaskContext State Diagram

- 2.8. Possible Run-Time failure states.

- 2.9. TaskContext Interface

- 2.10. Component Deployment Levels

- 2.11. Example Component Deployment.

- 3.1. State Change Semantics in Reactive Mode

- 3.2. State Change Semantics in Automatic Mode

- 5.1. Tasks Sending Commands

- 5.2. Event Handling

- 5.3. DataObjects versus Buffers

- 6.1. OS Interface overview

- 7.1. Device Interface Overview

List of Tables

List of Examples

- 2.1. TaskContext Data Flow Topology Example

- 2.2. TaskContext Peer Disconnection Example

- 3.1. string and array creation

- 3.2. StateMachine Definition Format

- 3.3. StateMachine Example (state.osd)

- 3.4. Program example (program.ops)

- 5.1. Example Periodic Thread Interaction

- 5.2. Using Events

- 5.3. Event Types

- 5.4. Creating attributes

- 5.5. Using properties

- 5.6. Accessing a Buffer

- 5.7. Accessing a DataObject

- 5.8. Using the Logger class

- 6.1. Locking a Mutex

- 7.1. Using the name service

Table of Contents

This manual is for Software developers who wish to write their own software components using the Orocos Real-Time Toolkit. There is also a CoreLib Reference Chapter at the end to find out the precise semantics of our communication primitives and other important classes. The Orocos hardware abstraction is included as well. The HTML version of this manual links to the API documentation of all classes.

The most important Chapters to get started building a component are presented first. Orocos components are implemented using the 'TaskContext' class and the following Chapter explains step by step how to define the interface of your component, such that you can interact with your component from a user interface or other component.

For implementing algorithms within your component, various C++ function hooks are present in wich you can place custom C++ code. As your component's functionality grows, you can extend its scripting interface and call your algorithms from a script.

The Orocos Scripting Chapter details how to write programs and state machines. "Advanced Users" may benefit from this Chapter as well since the scripting language allows to 'program' components without recompiling the source.

The Real-Time Toolkit allows setup, distribution and the building of real-time software components. It is sometimes refered to as 'middleware' because it sits between the application and the Operating System. It takes care of the real-time communication and execution of software components.

Orocos provides a limited set of components for application development. The Orocos Component Library (OCL) is a collection of components ranging from general purpose to hardware specific components. They serve as an example, although some are using complex constructs of the Real-Time Toolkit. Two noteworthy components are the TaskBrowser and the DeploymentComponent. The former provides a console which allows you to manipulate remote or in-process components and lets you browse the component network. The latter is a component which can load other components into the same process. For beginners, the TaskBrowser is used to instruct the DeploymentComponent to load, connect and configure a given list of components, but for matured applications, the DeploymentComponent is given an XML file which describes which components to load, connect and start in the current application.

The components of the Orocos Component Library are documented seperately on the OCL webpage.

The Real-Time Toolkit is structured in layers on top of the Operating System and the devices (IO).

An Orocos component is built upon the Real-Time Toolkit (RTT) library. It allows you to build components which are accessible over a network, configurable using XML files and listen to a scripting interface, which allows components to be controlled using text commands. A component which accesses IO devices can use the Orocos Device Interface as well which defines how to interact with analog and digital IO and encoders. Of course, components can make use of external, non-Orocos libraries as well.

Orocos components which only use the Real-Time Toolkit are portable over different Operating Systems (OS) and processor architectures. Orocos has an internal OS abstraction which allows the components to run on any supported architecture. When your component uses an external library, for example a camera or vision library, portability depends on these libraries.

Table of Contents

- 1. Introduction

- 2. Hello World !

- 3. Setting Up a Basic Component

- 3.1. Task Application Code

- 3.2. Starting a Component

- 3.3. A TaskContext's Error and Active states

- 3.4. A TaskContext's Run-Time Errors

- 3.5. Error States Example

- 3.6. Interfacing the TaskContext

- 3.7. Introducing the TaskContext's Interface

- 3.8. The Data Flow Interface

- 3.9. The Method Interface

- 3.10. Method Argument and Return Types

- 3.11. The Attributes and Properties Interface

- 3.12. The Command Interface

- 3.13. The Event Interface

- 4. Connecting TaskContexts

- 5. Using Tasks

- 6. Deploying Components

- 7. Advanced Techniques

Abstract

This document describes the Orocos Component Model, which allows to design Real-Time software components which transparently communicate with each other.

This manual documents how multi-threaded components can be defined in Orocos such that they form a thread-safe robotics/machine control application. Each control component is defined as a "TaskContext", which defines the environment or "context" in which an application specific task is executed. The context is described by the five Orocos primitives: Event, Property, Command, Method and Data Port. This document defines how a user can write his own task context and how it can be used in an application.

Figure 2.1. Components Run in Threads

Components run in (periodic) threads and can communicate transparently. The Orocos RTT does a 'best effort' to deliver the highest performance to the highest priority threads.

A component is a basic unit of functionality which executes one or more (real-time) programs in a single thread. The program can vary from a mere C function over a real-time program script to a real-time hierarchical state machine. The focus is completely on thread-safe time determinism. Meaning, that the system is free of priority-inversions, and all operations are lock-free (also data sharing and other forms of communication such as events and commands). Real-time components can communicate with non real-time components (and vice verse) transparently.

| Note | |

|---|---|

In this manual, the words task and component are used as equal words, meaning a software component built using the C++ TaskContext class. |

The Orocos Component Model enables :

Lock free, thread-safe, inter-thread function calls.

Communication between hard Real-Time and non Real-Time threads.

Deterministic execution time during communication for the higher priority thread.

Synchronous and asynchronous communication between threads.

Interfaces for component distribution.

C++ class implementations for all the above.

This chapter relates to other chapters as such :

- Core Library

provides the Command and Event infrastructure, activity to thread mapping, Properties and lock-free data exchange implementations.

- Execution Engine

executes the commands, events, real-time programs and scripts in a component.

- Orocos Scripting

provides a real-time scripting language which is convertible to a form which can be accepted by the Execution Engine.

The Scripting chapter gives more details about script syntax for state machines and programs.

Figure 2.2. Schematic Overview of a TaskContext

The Execution Flow is formed by Programs and State Machines sending commands, events,... to Peer Tasks. The Data Flow is the propagation of data from one task to another, where one producer can have multiple consumers and vice verse.

A component's interface consists of : Attributes and Properties, Commands, Methods, Events and Data Flow ports which are all public. The class TaskContext groups all these interfaces and serves as the basic building block of applications. A component developer 'builds' these interfaces using the instructions found in this manual.

This section introduces tasks through the "hello world" application, which is included in the Orocos Component Library. It contains one TaskContext component, HelloWorld, which has one instance of each communication primitive.

The way we interact with TaskContexts during development of an Orocos application is through the Task Browser. The TaskBrowser is a powerful console tool which helps you to explore, execute and debug TaskContexts in running programs. All you have to do is to create a TaskBrowser and call its loop() method. When the program is started from a console, the TaskBrowser takes over user input and output. The TaskBrowser uses the GNU readline library to easily enter commands to the tasks in your system. This means you can press TAB to complete your commands or press the up arrow to scroll through previous commands.

| Note | |

|---|---|

The TaskBrowser is a component of its own which is found in the Orocos Component Library (OCL). |

#include <ocl/TaskBrowser.hpp>

#include <rtt/os/main.h>

// ...

using namespace Orocos;

int ORO_main( int, char** )

{

// Create your tasks

TaskContext* task = ...

// when all is setup :

TaskBrowser tbrowser( task );

tbrowser.loop();

return 0;

}

Now let's start the helloworld application. If you downloaded OCL and compiled it from source, You can do this by entering the helloworld subdirectory of your OCL build directory and running ./helloworld

In case you got OCL as a binary package, enter loadComponent("Hello","orocos-helloworld") at the prompt of the deployer application for your target: ORO_LOGLEVEL=5 deployer-gnulinux for example. This command loads the Orocos-HelloWorld component library and creates a component with name "Hello" (Requires OCL 1.4.1 or later). Finally, type cd Hello to start with the exercise.

0.016 [ Info ][main()] ./helloworld manually raises LogLevel to 'Info' (5). See also file 'orocos.log'.

0.017 [ Info ][main()] **** Creating the 'Hello' component ****

0.018 [ Info ][ConnectionC] Creating Asyn connection to the_event.

0.018 [ Info ][ExecutionEngine::setActivity] Hello is periodic.

0.019 [ Info ][main()] **** Starting the 'Hello' component ****

0.019 [ Info ][main()] **** Using the 'Hello' component ****

0.019 [ Info ][main()] **** Reading a Property: ****

0.019 [ Info ][main()] the_property = Hello World

0.019 [ Info ][main()] **** Sending a Command: ****

0.020 [ Info ][main()] Sending the_command : 1

0.020 [ Info ][main()] **** Calling a Method: ****

0.020 [ Info ][main()] Calling the_Method : Hello World

0.020 [ Info ][main()] **** Emitting an Event: ****

0.021 [ Info ][main()] **** Starting the TaskBrowser ****

0.021 [ Info ][TaskBrowser] Creating a BufferConnection from the_buffer_port to the_buffer_port with size 13

0.021 [ Info ][TaskBrowser] Connected Port the_buffer_port to peer Task Hello.

0.022 [ Info ][Hello] Creating a DataConnection from the_data_port to the_data_port

0.022 [ Info ][Hello] Connected Port the_data_port to peer Task TaskBrowser.

Switched to : Hello

0.023 [ Info ][main()] Entering Task Hello

0.023 [ Info ][Hello] Hello Command: World

0.023 [ Info ][Hello] Receiving Event: Hello World

This console reader allows you to browse and manipulate TaskContexts.

You can type in a command, event, method, expression or change variables.

(type 'help' for instructions)

TAB completion and HISTORY is available ('bash' like)

In Task Hello. (Status of last Command : none )

(type 'ls' for context info) :

The first [ Info ] lines are printed by the Orocos Logger, which has been configured to display informative messages to console. Normally, only warnings or worse are displayed by Orocos. You can always watch the log file 'orocos.log' in the same directory to see all messages. After the [Log Level], the [Origin] of the message is printed, and finally the message itself. These messages leave a trace of what was going on in the main() function before the prompt appeared.

Depending on what you type, the TaskBrowser will act differently. The built-in commands cd, help, quit and ls are seen as commands to the TaskBrowser itself, if you typed something else, it tries to evaluate your command to an expression and will print the result to the console. If you did not type an expression, it tries to parse it as a command to a (peer) task. If that also fails, it means you made a typo and it prints the syntax error to console.

In Task Hello. (Status of last Command : none ) (type 'ls' for context info) :1+1 Got :1+1 = 2

To display the contents of the current task, type ls, and switch to one of the listed peers with cd, while cd .. takes you one peer back in history. Since there are no peers other than the TaskBrowser itself, one can not cd anywhere in this example.

In Task Hello. (Status of last Command : none ) (type 'ls' for context info) :ls Listing Hello : Attributes : (Attribute) string the_attribute = Hello World (Attribute) string the_constant = Hello World (Property ) string the_property = Hello World (Hello World Description) Interface : Methods : the_method isRunning start stop trigger update Commands : the_command Events : the_event Objects : this ( ) the_data_port ( A Task Object. ) the_buffer_port ( A Task Object. ) Ports : the_data_port the_bufferPort Peers : TaskBrowser

| Note | |

|---|---|

To get a quick overview of the commands, type help. |

First you get a list of the Properties and Attributes (alphabetical) of the current component. Properties are meant for configuration and can be written to disk. Attributes are solely for run-time values. Each of them can be changed (except constants.)

Next, the interface of this component is listed: One method is present the_method, one command the_command and one event the_event. They all print a 'Hello World' string when invoked.

In the example, the current task has only three objects: this, the_data_port and the_buffer_port. The this object serves as the public interface of the Hello component. These objects contain methods, commands or events. The the_data_port and the_buffer_port objects are created to represent the data ports of the Hello component. They allow you to to send or receive data to these ports and check if they are connected.

Last, the peers are shown, that is, the components which are known, and may be used, by this component. The HelloWorld component is a stand-alone component and has only the TaskBrowser as a peer.

To get a list of the Task's interface, you can always type an object name, for example this.

In Task Hello. (Status of last Command : none ) (type 'ls' for context info) : this Got :this Printing Interface of 'Hello' : Command : bool the_command( string the_arg ) Hello Command Description the_arg : Use 'World' as argument to make the command succeed. Method : string the_method( ) Hello Method Description Event : void the_Event( string the_data ) Hello Event Description the_data : The data of this event.

Now we get more details about the commands, methods and events registered in the public interface. We see now that the the_command command takes one argument as a string, or that the the_method method returns a string. One can invoke each one of them.

In Task Hello. (Status of last Command : none ) (type 'ls' for context info) :the_method() Got :the_method() = Hello World

Methods are called directly and the TaskBrowser prints the result. The return value of the_method() was a string, which is "Hello World". This works just like calling a 'C' function.

When a command is entered, it is sent to the Hello component, which will execute it in its own threadon behalf of the sender. The different stages of its lifetime are displayed by the prompt. Hitting enter will refresh the status line:

In Task Hello. (Status of last Command : none ) (type 'ls' for context info) :the_command("World") Got :the_command("World") In Task Hello. (Status of last Command : queued) 1021.835 [ Info ][Hello] Hello Command: World (type 'ls' for context info) : 1259.900 [ Info ][main()] Checking Command: World In Task Hello. (Status of last Command : done ) (type 'ls' for context info) :

A Command might be rejected (return false) in case it received invalid arguments:

In Task Hello. (Status of last Command : done ) (type 'ls' for context info) :the_command("Belgium") Got :the_command("Belgium") In Task Hello. (Status of last Command : queued ) (type 'ls' for context info) : 1364.505 [ Info ][Hello] Hello Command: Belgium In Task Hello. (Status of last Command : fail ) (type 'ls' for context info) :

Besides sending commands to tasks, you can alter the attributes of any task, program or state machine. The TaskBrowser will confirm validity of the assignment with 'true' or 'false' :

In Task Hello. (Status of last Command : none ) (type 'ls' for context info) :the_attribute Got :the_attribute = Hello World In Task Hello. (Status of last Command : none ) (type 'ls' for context info) :the_attribute = "Veni Vidi Vici !" Got :the_attribute = "Veni Vidi Vici !" = true In Task Hello. (Status of last Command : none ) (type 'ls' for context info) :the_attribute Got :the_attribute = Veni Vidi Vici !

Finally, let's emit an Event. The Hello World Event requires a payload. A callback handler was registered by the component, thus when we emit it, it can react to it:

In Task Hello. (Status of last Command : none ) (type 'ls' for context info) :the_event(the_attribute) Got :the_event(the_attribute) = true In Task Hello. (Status of last Command : none ) (type 'ls' for context info) : 354.592 [ Info ][Hello] Receiving Event: Veni Vidi Vici !

The example above passed the the_attribute object as an argument to the event, and it was received by our task correctly. Events are related to commands, but allow broadcasting of data, while a command has a designated receiver.

The Data Ports can be accessed through the the_data_port and the_buffer_port object interfaces.

Initially, these ports are unconnected, as the HelloWorld component did not connect its ports to another component. Unconnected ports can hardly be used. In order to test them, you can instruct the TaskBrowser to connect to them. This is done by entering the .connect command (note the '.').

Since each port has an associated object, we can inspect the interface of a port by typing its name:

In Task Hello. (Status of last Command : none ) (type 'ls' for context info) :the_data_port Got :the_data_port Printing Interface of 'the_data_port' : Method : string Get( ) Get the current value of this Data Port Method : void Set( string const& Value ) Set the current value of this Data Port Value : The new value.

The the_data_port object has two methods: Get() and Set(). Since data ports are used for sending unbuffered data packets between components, this makes sense. One can interact with the ports as such:

In Task Hello. (Status of last Command : none ) (type 'ls' for context info) :the_data_port.Set("World") Got :the_data_port.Set("World") = (void) In Task Hello. (Status of last Command : none ) (type 'ls' for context info) :the_data_port.Get() Got :the_data_port.Get() = World

When a value is Set(), it is sent to whatever is connected to that port, when we read the port using Get(), we see that the previously set value is present. The advantage of using ports is that they are completely thread-safe for reading and writing, without requiring user code. The Hello component also contains a the_buffer_port for buffered data transfer. You are encouraged to play with that port as well.

Remember that the TaskBrowser was a component as well ? When a user enters ls, the interface of the visited component is listed. It is also possible to get an 'outside' view of the visited component, through the eyes of an external component. The leave allows a view from within the TaskBrowser itself:

In Task Hello. (Status of last Command : none ) (type 'ls' for context info) :leave 1001.607 [ Info ][main()] Watching Task Hello Watching Task Hello. (Status of last Command : none ) (type 'ls' for context info) :ls Listing TaskBrowser : Attributes : (none) Interface : Methods : isRunning start stop trigger update Commands : (none) Events : (none) Objects : this ( ) the_data_port ( A Task Object. ) the_buffer_port ( A Task Object. ) Ports : the_data_port the_buffer_port Hello Peers : TaskBrowser

The following things are noteworthy: 'ls' shows now the contents of the TaskBrowser itself and no longer of the Hello Component. In this example, the TaskBrowser has the same ports as the component it visits: the_data_port and the_buffer_port. These were created when we issued the .connect previously. Otherwise, there would be no data ports.

One can return to the 'inside' view again by typing enter:

Watching Task Hello. (Status of last Command : none ) (type 'ls' for context info) :enter 1322.653 [ Info ][main()] Entering Task Hello In Task Hello. (Status of last Command : none ) (type 'ls' for context info) :

Last but not least, hitting TAB twice, will show you a list of possible completions, such as peers or commands :

In Task Hello. (Status of last Command : none )

(type 'ls' for context info) :

the_attribute the_event cd .. quit

the_buffer_port. the_method help this.

the_command the_property leave

the_constant TaskBrowser. list

the_data_port. cd ls

(type 'ls' for context info) :

TAB completion works even across peers, such that you can type a TAB completed command to another peer than the current peer.

In order to quit the TaskBrowser, enter quit:

In Task Hello. (Status of last Command : none )

(type 'ls' for context info) :quit

1575.720 [ Info ][ExecutionEngine::setActivity] Hello is disconnected from its activity.

1575.741 [ Info ][Logger] Orocos Logging Deactivated.

The TaskBrowser Component is application independent, so that your end user-application might need a more suitable interface. However, for testing and inspecting what is happening inside your real-time programs, it is a very useful tool. The next sections show how you can add properties, commands, methods etc to a TaskContext.

| Note | |

|---|---|

If you want a more in-depth tutorial, see the 'task-intro' example for a TaskBrowser which visits a network of three TaskContexts. |

Components are implemented by the TaskContext class. It is useful speaking of a context because it defines the context in which an activity (a program) operates. It defines the interface of the component, its properties, its peer components and uses its ExecutionEngine to execute its programs and to process commands and events.

This section walks you through the definition of an example component in order to show you how you could build your own component.

| Important | |

|---|---|

The ready-to-execute code of this section can be found in the 'simple-task' RTT example on the RTT Source code page of the Orocos.org website. |

A TaskContext is constructed as :

#include <rtt/TaskContext.hpp> // we assume this is done in all the following code listings : using namespace RTT; TaskContext a_task("ATask");

The argument is the (unique) name of the component.

A task's interface consists of : Commands, Methods, Ports, Attributes and Properties and Events, which are all public. We will refer to them as members.

Figure 2.4. Schematic Overview of a TaskContext

The Execution Flow is formed by the processing of commands, methods and events (which call in turn user functions). The Data Flow is the propagation of data from one task to another, where one producer can have multiple consumers.

When a TaskContext is running, it accepts commands or events using its Execution Engine. The Execution Engine will check periodically for new commands in it's queue and execute programs which are running in the task. When a TaskContext is started, the ExecutionEngine is running. The complete state flow of a TaskContext is shown in Figure 2.5, “ TaskContext State Diagram ”.

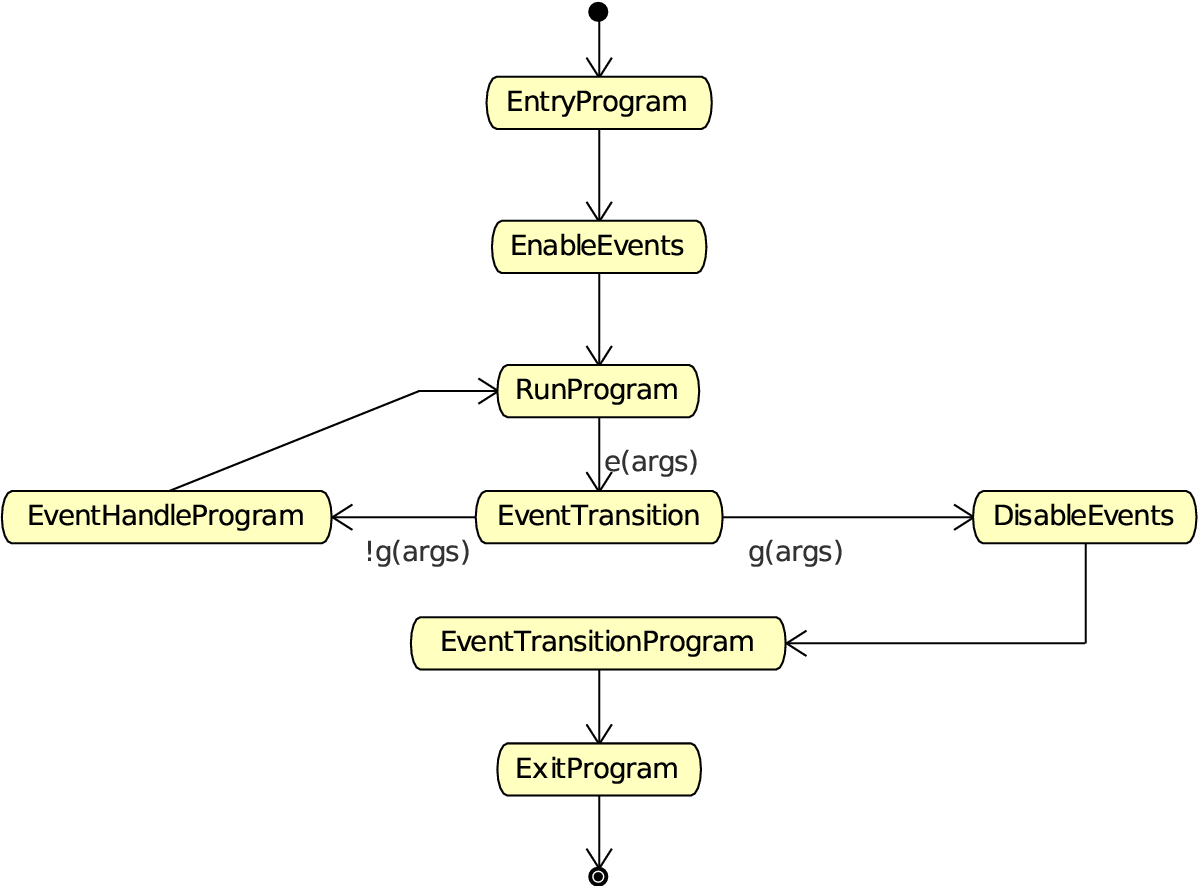

Figure 2.5. TaskContext State Diagram

During creation, a component is in the

Init state. When constructed, it

enters the PreOperational or

Stopped (default) state. If it enters

the PreOperational state after construction, it requires

an additional configure() call before

it can be start()'ed. The figure

shows that for each API function, a user 'hook' is

available.

The first section goes into detail on how to use these hooks.

The user application code is filled in by inheriting from the TaskContext and implementing the 'Hook' functions. There are five such functions which are called when a TaskContext's state changes.

The user may insert his configuration-time setup/cleanup code in the

configureHook() (read XML, print status

messages etc.) and cleanupHook() (write

XML, free resources etc.).

The run-time (or: real-time) application code belongs in the

startHook(),

updateHook() and

stopHook() functions.

class MyTask

: public TaskContext

{

public:

MyTask(std::string name)

: TaskContext(name)

{

// see later on what to put here.

}

/**

* This function is for the configuration code.

* Return false to abort configuration.

*/

bool configureHook() {

// ...

return true;

}

/**

* This function is for the application's start up code.

* Return false to abort start up.

*/

bool startHook() {

// ...

return true;

}

/**

* This function is called by the Execution Engine.

*/

void updateHook() {

// Your component's algorithm/code goes in here.

}

/**

* This function is called when the task is stopped.

*/

void stopHook() {

// Your stop code after last updateHook()

}

/**

* This function is called when the task is being deconfigured.

*/

void cleanupHook() {

// Your configuration cleanup code

}

};| Important | |

|---|---|

By default, the TaskContext enters the

|

If you want to force the user to call configure() of your TaskContext, set the TaskState in your constructor as such:

class MyTask

: public TaskContext

{

public:

MyTask(std::string name)

: TaskContext(name, PreOperational) // demand configure() call.

{

//...

}

};

When configure() is called, the

configureHook() (which you

must implement!) is executed and must return

false if it failed. The TaskContext drops to the

PreOperational state in that case.

When configureHook() succeeds, the

TaskContext enters the Stopped state

and is ready to run.

A TaskContext in the Stopped state

(Figure 2.5, “

TaskContext State Diagram

”)

may be start()'ed upon which

startHook() is called once and may abort

the start up sequence by returning false. If true, it enters the

Running state and

updateHook() is called (a)periodically by

the ExecutionEngine, see below. When the task is

stop()'ed, stopHook()

is called after the last updateHook() and

the TaskContext enters the Stopped state

again. Finally, by calling cleanup(), the

cleanupHook() is called and the TaskContext

enters the PreOperational state.

The functionality of a component, i.e. its algorithm, is executed

by its internal Execution Engine. To run a TaskContext, you

need to use one of the

ActivityInterface classes from the

RTT, most likely Activity

or SlaveActivity create a threaded

or non-threaded activity which executes your task. This

relation is shown in Figure 2.6, “

Executing a TaskContext

”.

The RTT::Activity class allocates a thread which executes the

Execution Engine. The chosen

ActivityInterface object will invoke

the Execution Engine, which will in turn invoke the

application's hooks above. When created, the TaskContext is assigned

the default Activity by default.

This means it has an internal thread which can receive commands

and process events but does not execute user code in

updateHook() periodically.

Figure 2.6. Executing a TaskContext

You can make a TaskContext 'active' by creating an Activity object which executes its Execution Engine. The Execution Engine delegates all work to specific 'Processors' and user code in updateHook().

A common task in control is executing an algorithm periodically. This is done by attaching an activity to the Execution Engine which has a periodic execution time set.

#include <rtt/Activity.hpp> using namespace RTT; TaskContext* a_task = new MyTask("the_task"); // Set a periodic activity with priority=5, period=1000Hz a_task->setActivity( new Activity( 5, 0.001 )); // ... start the component: a_task->start(); // ... a_task->stop();

Which will run the Execution Engine of "ATask" with a

frequency of 1kHz. This is the frequency at which state

machines are evaluated, program steps taken, commands and

events are accepted and executed and the application code in

updateHook() is run. When the periodic

activity is stopped again, all programs are stopped, state

machines are brought into the final state and no more commands

or events are accepted.

You don't need to create a new Activity if you want to switch

to periodic execution, you can also use the setPeriod

function:

// In your TaskContext's configureHook():

bool configureHook() {

return this->setPeriod(0.001); // set to 1000Hz execution mode.

}

An updateHook() function of a periodic

task could look like:

class MyTask

: public TaskContext

{

public:

// ...

/**

* This function is periodically called.

*/

void updateHook() {

// Your algorithm for periodic execution goes inhere

outPort.Set( inPort.Get() * 2.0 );

}

};You can find more detailed information in ??? in the CoreLib reference.

A TaskContext is run by default by a non periodic RTT:Activity object. This

is useful when updateHook() only needs

to process data when it arrives or must wait on

network connections or does any other blocking operation.

Upon start(), the Execution Engine waits for new Commands or Events to

come in to be executed. Each time such an event happens, the user's

application code (updateHook()) is called each

time an event or command arrives.

An updateHook() function of a non periodic

task could look like:

class MyTask

: public TaskContext

{

public:

// ...

/**

* This function is only called by the Execution Engine

* when 'trigger()' is called or an event or command arrives.

*/

void updateHook() {

// Your blocking algorithm goes inhere

char* data;

double timeout = 0.02; // 20ms

int rv = my_socket_read(data, timeout);

if (rv == 0) {

// process data

this->stateUpdate(data);

}

// This is special for non periodic activities, it makes

// the TaskContext call updateHook() again after

// commands and events are processed.

this->getActivity()->trigger();

}

};| Warning | |

|---|---|

Non periodic activities should be used with care and with much thought in combination with scripts (see later). The ExecutionEngine will do absolutely nothing if no commands or asynchronous events or no trigger comes in. This may lead to surprising 'bugs' when program scripts or state machine scripts are executed, as they will only progress upon these events and seem to be stalled otherwise. |

You can find more detailed information in ??? in the CoreLib reference.

In addition to the PreOperational,

Stopped and Running

TaskContext states, you can use two additional states for more

advanced component behaviour: the FatalError

and the Active states, as in

Figure 2.7, “

Extended TaskContext State Diagram

”

Figure 2.7. Extended TaskContext State Diagram

This figure shows the complete state diagram of a

TaskContext. This is Figure 2.5, “

TaskContext State Diagram

”

extended with two more states: Active

and FatalError.

The FatalError state is entered whenever

the TaskContext's fatal() function is

called, and indicates that an unrecoverable error occured,

possibly in the updateHook() or in any

other component function. The ExecutionEngine is immediately

stopped and stopHook() is called when

this state is entered.

In order to leave the FatalError state,

one needs to call resetError() which calls

resetHook(), the user function, in turn.

When resetHook() returns true, error

recovery was possible and the component becomes

Stopped again. In case

resetHook() returns false, the

TaskContext becomes PreOperational and

requires configuration.

The Active state is for processing

incomming commands and events, but not yet running the

updateHook() user function. It is

used for components that require to accept commands

before they are running. The Active

state is optional and can be skipped.

The Active state is entered when

activate() is called from the

Stopped state. In order to check this

transition the user function

activateHook() is called which must

return true to let the transition succeed, otherwise, the

TaskContext remains Stopped. Once the

TaskContext is Active, it can be

start()'ed (continue to

Running) or stop()'ed

(go back to Stopped).

It is possible that non-fatal run-time errors occur which

may require user action on one hand, but do not prevent

the component from performing it's task, or allow degraded

performance.

Therefor, in the Running state, one can

make a transition to the

RunTimeWarning and RunTimeError

sub-states by calling warning() and

error() respectively. See

Figure 2.8, “

Possible Run-Time failure states.

”

Figure 2.8. Possible Run-Time failure states.

This figure shows the sub-states of the Running state as a UML state chart.

When the application code calls error(),

the RunTimeError state is entered and

errorHook() is executed instead of

updateHook(). If at some moment the

component detects that it can resume normal operation, it

calls the recovered() function, which

leads to the Running state again and in the next iteration,

updateHook() is called again. When

warning() is called, the RunTimeWarning

state is entered and updateHook() is

still executed (there is no

warningHook()). Use

recovered() again to go back to the

Running state.

Use getErrorCount() and

getWarningCount() to read the number of

times the error and warning states were entered. Using these

functions, you can track if any intermittent problem has

occured. The counters are reset when the

cleanup() method is called in the

Stopped state.

Here is a very simple use case, a TaskContext communicates over a socket with a remote device. Normally, we get a data packet every 10ms, but sometimes one may be missing. This is signaled as a run time warning, but we just continue. When we don't receive 5 packets in a row, we signal this as a run time error. From the moment packets come in again we go back to run time warning. Now if the data we get is corrupt, we go into fatal error mode, as we have no idea what the current state of the remote device is, and shouldn't be updating our state, as no one can rely on the correct functioning of the TaskContext.

Here's the pseudo code:

class MyComponent : public TaskContext

{

int faults;

public:

MyComponent(const std::string &name)

: TaskContext(name), faults(0)

{}

protected:

// Read data from a buffer.

// If ok, process data, otherwise, trigger

// a runtime warning. When to many faults occur,

// trigger a runtime error.

void updateHook()

{

Data_t data;

bool rv = mybuf.Pop( data );

if ( rv ) {

this->stateUpdate(data);

faults = 0;

} else {

faults++;

if (faults > 4)

this->error();

else

this->warning();

}

}

// Called instead of updateHook() when in runtime error state.

void errorHook()

{

this->updateHook(); // just call updateHook anyway.

}

// Called by updateHook()

void stateUpdate(Data_t data)

{

// Check for corrupt data

if ( checkData(data) == -1 ) {

this->fatalError(); // we will enter the FatalError state.

} else {

// data is ok: update internal state...

}

}

};

Finally, you start this component with a non periodic Activity, which allows you to wait in updateHook() for as long as you want. Commands and events are processed when you leave the function, that's why you can not use a while(1) {} loop within updateHook(), but re-trigger the activity again for a next run.

When you want to discard the 'warning' state of the component, call mycomp.recovered(). If your component went into FatalError, call mycomp.reset() and mycomp.start() again for processing updateHook() again.

During development of your TaskContext, it is handy to connect the TaskBrowser to your task such that you can interactively manipulate it and it's properties:

#include <ocl/TaskBrowser.hpp> // ... see above TaskBrowser browser(a_task); // Start the interactive console: browser.loop();

In which you can start/stop the task and manipulate every aspect of it's interface, as was seen in the previous section.

A TaskContext exists of a number of access or methods which expose a specific part of the interface. These methods are:

a_task.ports();

a_task.methods();

a_task.attributes();

a_task.properties();

a_task.commands();

a_task.events();

The meaning of these methods are explained in the following sections.

Figure 2.9. TaskContext Interface

The Execution Flow is formed by the processing of commands, methods and events (which call in turn user functions). The Data Flow is the propagation of data from one task to another using ports. Configuration is done using properties and attributes.

| Purpose | |

|---|---|

The 'Data Flow' is a 'stream of data' between tasks which are used to perform calculations. A classical control loop can be implemented using the Data Flow interface. The data is passed buffered or unbuffered from one task to others. A Task can be woken up if data arrives at one or more ports or it can 'poll' for new data on its ports. Reading and writing data ports is always real-time and thread-safe, on the condition that copying your data (i.e. your copy constructor) is as well. |

The Orocos Data Flow is implemented with the Port-Connector software pattern. Each task defines its data exchange ports and inter-task connectors transmit data from one port to another. A Port is defined by a name, unique within that task, the data type it wants to exchange and the buffered or un-buffered method of exchanging. Buffered exchange is done by "Buffer" Ports and un-buffered exchange is done by "Data" Ports.

A Data Port can offer read-only, write-only or read-write access to the unbuffered data. A Buffer Port can offer read-only, write-only and read-write access to the buffered data. Finally, you can opt that new data on selected ports wakes up your task. The example below shows all these possibilities.

| Important | |

|---|---|

The ready-to-execute code of this section can be found in the 'dataflow-task' package on the RTT Source code page of the Orocos.org website. |

Any kind of data can be exchanged (also user defined types) but for readability, only the 'double' C type is used here.

#include <rtt/Ports.hpp>

using namespace RTT;

class MyTask

: public TaskContext

{

// Read-only data port:

ReadDataPort<double> indatPort;

// Write-only data port:

WriteDataPort<double> outdatPort;

// Read-Write data port:

DataPort<double> rwdatPort;

// Read-only buffer port:

ReadBufferPort<double> inbufPort;

// Write-only buffer port:

WriteBufferPort<double> outbufPort;

// Read-Write buffer port:

BufferPort<double> rwbufPort;

public:

// ...

MyTask(std::string name)

: TaskContext(name),

indatPort("Data_R"),

outdatPort("Data_W", 1.0), // note: initial value

rwdatPort("Data_RW", 1.0),

inbufPort("SetPoint_X"),

outbufPort("Packet_1", 15), // note: buffer size

rwbufPort("Packet_2", 30)

{

// an 'EventPort' wakes our task up when data arrives.

this->ports()->addEventPort( &indatPort, "Event driven Input Data Port" );

// These ports do not wake up our task

this->ports()->addPort( &outdatPort, "Output Data Port" );

this->ports()->addPort( &rwdatPort, "Read-Write Data Port" );

this->ports()->addPort( &inbufPort, "Read-Only Buffer Port" );

this->ports()->addPort( &outbufPort, "Write-Only Buffer Port" );

this->ports()->addPort( &rwbufPort, "Read-Write Buffer Port" );

// more additions to follow, see below

}

// ...

};

The example starts with declaring all the ports of MyTask. A template parameter '<double>' specifies the type of data the task wants to exchange through that port. Logically, if two ore more tasks are connected, they must agree on this type. The constructor of MyTask initialises each port with a name. This name can be used to 'match' ports between connected tasks ( using 'connectPorts', see Section 4, “Connecting TaskContexts” ), but it is possible to connect Ports with different names as well.

There are two ways to add a port to the TaskContext interface:

using addPort()

or addEventPort(). In the latter case,

new data arriving on the port will wake up ('trigger') the

activity of our TaskContext and updateHook() get's executed.

If you want to know which port caused the wake-up, do not implement

updateHook() (ie remove this function from your component) and use

updateHook(const std::vector<PortInterface*>& updatedPorts)

which provides you a list of all ports having received new data.

| Note | |

|---|---|

Only ReadDataPort, DataPort,

ReadBufferPort and BufferPort

added with

Adding a WriteDataPort or WriteBufferPort

with In the current implementation, addEventPort() must happen before you start() the TaskContext the first time. Any ports added after your component has been started the first time will not cause them to wake up your component. So even stopping and starting wil not solve this. Therefor, it is recommended to only use addEventPort in your component's contructor (as shown in the example above). This issue is being looked at. |

Write and read-write Buffers take besides a name, the prefered

buffer size as a parameter. In the example, these are the

values 15 and 30. Before the task is connected to its peers,

you can still change this value with the

setBufferSize() function of the Port, for

example in the configureHook() function

of your TaskContext.

Finally, a 'write' port can take an initial value in the constructor as well. This value will be used when the connection between two ports is created in order to initialise the connection ( using 'connectPorts', see Section 4, “Connecting TaskContexts” for a full example). If a 'write' port is connected to an existing connection, the initial value (and buffer size) are ignored and the settings of the existing connection are not touched. You can modify the initial value with the 'Set( value )' function in both Buffer and Data write ports.

The Data Flow interface is used by your task from within

the program scripts or its updateHook()

method. Logically the script or method reads the inbound

data, calculates something and writes the outbound data.

#include <rtt/Ports.hpp>

using namespace RTT;

class MyTask

: public TaskContext

{

// ...Constructor sets up Ports, see above.

bool startHook() {

// Check validity of (all) Ports:

if ( !indatPort.connected() ) {

// No connection was made !

return false;

}

if ( !outdatPort.connected() ) {

// ...

}

return true;

}

/**

* Note: use updateHook(const std::vector<PortInterface*>&)

* instead for having information about the updated event

* driven ports.

*/

void updateHook() {

// Read and write the Data Flow:

// Unbuffered:

double val = indatPort.Get();

// calculate...

outdatPort.Set( val );

// Buffered:

if ( inbufPort.Pop( val ) ) {

// calculate...

} else {

// buffer empty.

}

if ( outbufPort.Push( val ) ) {

// ok.

} else {

// buffer full.

}

}

// ...

};

It is wise to check in the startHook()

( or earlier: in configureHook() )

function if all necessary ports are connected()

( or ready() ).

At this point, the task start up can still be aborted by

returning false. Otherwise, a write to a port

will be discarded, while a read returns the initial value

or the default value. A Pop of a disconnected port will

always return false.

When a Port is connected, it becomes available to the Orocos scripting system such that (part of) the calculation can happen in a script. Also, the TaskBrowser can then be used to inspect the contents of the DataFlow on-line.

| Note | |

|---|---|

In scripting, it is currently not yet possible to know which event port woke your task up. |

A small program script could be loaded into MyTask with the following contents:

program MyControlProgram {

double the_K = K // read task property, see later.

double setp_d

while ( true ) {

if ( SetPoint_X.Pop( setp_d ) ) { // read Buffer Port

double in_d = Data_R.Get() // read Data Port

double out_d = (setp_d - in_d) * K // Calculate

do Data_W.Set( out_d ) // write Data Port

}

do nothing // this is a 'wait' point.

}

} The program "MyControlProgram" starts with declaring two variables and reading the task's Property 'K'. Then it goes into an endless loop, trying to Pop a set point value from the "SetPoint_X" Buffer Port. If that succeeds (buffer not empty) the "Data_R" Data Port is read and a simple calculation is done. The result is written to the "Data_W" Data Port and can now be read by the other end. Alternatively, the result may be directly used by the Task in order to write it to a device or any non-task object. You can use methods (below) to send data from scripts back to the C++ implementation.

Remark that the program is executed within the Execution

Engine. In order to avoid the endless loop, a 'wait' point

must be present. The "do nothing" command inserts such a

wait point and is part of the Scripting syntax. If you plan

to use Scripting state machines, such a

while(true) loop (and hence wait point)

is not necessary. See the Scripting Manual for a full

overview of the syntax.

| Purpose | |

|---|---|

A task's methods are intended to be called 'synchronously' by the caller, i.e. are directly executed like a function in the thread of the caller. Use it to 'calculate' a result or change a parameter. Calling methods is real-time but not thread-safe and should for a running component be guarded with a Mutex if it's functionality requires so. |

The easiest way to access a TaskContext's interface is through Methods. They resemble very much normal C or C++ functions, but they have the advantage to be usable in scripting or can be called over a network connection. They take arguments and return a value. The return value can in return be used as an argument for other Methods or stored in a variable. For all details, we refer to the Orocos Scripting Manual.

To add a TaskContext's method to the method interface, one proceeds similarly as when creating Data Ports. The data type is now replaced by a function signature, for example '

void(int, double)

' which is the signature of a function returning 'void' and having two arguments: an 'int' and a 'double'.

#include <rtt/Method.hpp>

using namespace RTT;

class MyTask

: public TaskContext

{

public:

void reset() { ... }

string getName() const { ... }

double changeParameter(double f) { ... }

// ...

Method<void(void)> resetMethod;

Method<string(void)> nameMethod;

Method<double(double)> paramMethod;

MyTask(std::string name)

: TaskContext(name),

resetMethod("reset", &MyTask::reset, this),

nameMethod("name", &MyTask::getName, this),

paramMethod("changeP", &MyTask::changeParameter, this)

{

// Add the method objects to the method interface:

this->methods()->addMethod( &resetMethod, "Reset the system.");

this->methods()->addMethod( &nameMethod, "Read out the name of the system.");

this->methods()->addMethod( ¶mMethod,

"Change a parameter, return the old value.",

"New Value", "The new value for the parameter.");

// more additions to follow, see below

}

// ...

};

In the above example, we wish to add 3 class functions to the method interface: reset, getName and changeParameter. This can be done by constructing a Method object with the correct function signature for each such class function. Each Method object is initialised in the constructor with a name ("reset"), a pointer to the class function (&MyTask::reset) and a pointer to the class object (this). This setup allows the method objects resetMethod, nameMethod and paramMethod to be invoked just like one would call the functions directly.

After the method objects are constructed, we add methods to the method interface using the addMethod() function. The addMethod() function requires a a method object (&resetMethod), a description ("Reset the system.") and a name, description pair for each argument (such as in changeParameter).

Using this mechanism, any method of any class can be added to a task's method interface.

In order to easily invoke a task's methods from a C++ program, only only needs a pointer to a TaskContext object, for example using the 'getPeer()' class function.

// create a method:

TaskContext* a_task_ptr;

Method<void(void)> my_reset_meth

= a_task_ptr->methods()->getMethod<void(void)>("reset");

// Call 'reset' of a_task:

reset_meth(); Methods can also be given arguments and collect return values. Both constant arguments and variable arguments are supported.

// used to hold the return value:

string name;

Method<string(void)> name_meth =

a_task_ptr->methods()->getMethod<string(void)>("name");

// Call 'name' of a_task:

name = name_meth();

cout << "Name was: " << name << endl;

// hold return value.

double oldvalue;

Method<double(double)> mychange_1 =

a_task.methods()->create("changeP");

// Call 'changeParameter' of a_task with argument '1.0'

oldvalue = mychange_1( 1.0 );

// oldvalue now contains previous value.Up to 4 arguments can be given. If the signature was not correct, the method invocation will be ignored. One can check validity of a method object with the 'ready()' function:

Method<double(double)> mychange_1 = ...; assert( mychange_1.ready() );

The arguments can be of any class type and type qualifier (const, &, *,...). However, to be compatible with the Orocos Program Parser variables, it is best to follow the following guidelines :

Table 2.1. Method Return & Argument Types

| C++ Type | In C++ functions passed by | Maps to Parser variable type |

|---|---|---|

| Primitive C types : double, int, bool, char | value (no const, no reference ) | double, int, bool, char |

| C++ Container types : std::string, std::vector<double> | const & | string, array |

| Orocos Fixed Container types : RTT::Double6D, KDL::[Frame | Rotation | Twist | ... ] | const & | double6d, frame, rotation, twist, ... |

Summarised, every non-class argument is best passed by value, and every class type is best passed by const reference. The parser does handle references (&) in the arguments or return type as well.

| Purpose | |

|---|---|

A task's attributes and properties are intended to configure and tune a task with certain values. Properties have the advantage of being writable to an XML format, hence can store 'persistent' state. For example, a control parameter. Attributes are lightweight values which can be read and written during run-time, and expose C++ class members to the scripting layer. Reading and writing properties and attributes is real-time but not thread-safe and should for a running component be limited to the task's own activity. |

A TaskContext may have any number of attributes or properties, of any type. They can be used by programs in the TaskContext to get (and set) configuration data. The task allows to store any C++ value type and also knows how to handle Property objects. Attributes are plain variables, while properties can be written to and updated from an XML file.

An attribute can be added in the task's interface (AttributeRepository) like this :

#include <rtt/Property.hpp>

#include <rtt/Attribute.hpp>

class MyTask

: public TaskContext

{

Attribute<bool> aflag;

Attribute<int> max;

Constant<double> pi;

Property<std::string> param;

Property<double> value;

public:

// ...

MyTask(std::string name)

: TaskContext(name),

param("Param","Param Description","The String"),

value("Value","Value Description", 1.23 ),

aflag("aflag", false),

max( "max", 5 ),

pi( "pi", 3.14 )

{

// other code here...

this->attributes()->addAttribute( &aflag );

this->attributes()->addAttribute( &max );

this->attributes()->addConstant( &pi );

this->properties()->addProperty( ¶m );

this->properties()->addProperty( &value );

}

// ...

};

Which inserts an attribute of type bool and int, name 'aflag' and 'max' and initial value of false and 5 to the task's interface. A constant 'pi' is added as well. The methods return false if an attribute with that name already exists. Adding a Property is also straightforward. The property is added in a PropertyBag.

To get a value from the task, you can use the set() and get() methods :

bool result = aflag.get();

assert( result == false );

param.set("New String");

assert( param.get() == "New String" );While another task can access it through the attributes() interface:

Attribute<bool> the_flag = a_task->attributes()->getAttribute<bool>("aflag");

assert( the_flag.ready() );

bool result = the_flag.get();

assert( result == false );

Attribute<int> the_max = a_task->attributes()->getAttribute<int>("max");

assert( the_max.ready() );

the_max.set( 10 );

assert( the_max.get() == 10 );The attributes 'the_flag' and 'the_max' are called 'mirrors' of the original attributes of the task.

See also Section 7, “Properties” in the Orocos CoreLib reference.

A program script can access the above attributes as in

// a program in "ATask" does : var double pi2 = pi * 2. var int myMax = 3 set max = myMax set Param = "B Value"

// an external (peer task) program does : var double pi2 = ATask.pi * 2. var int myMax = 3 set ATask.max = myMax

When trying to assign a value to a constant, the script parser will throw an exception, thus before the program is run. You must always specify the task's name (or 'task') when accessing a task's attribute, this is different from methods and commands, which may omit the task's name if the program is running within the task.

| Important | |

|---|---|

The same restrictions of Section 3.10, “Method Argument and Return Types” hold for the attribute types, when you want to access them from program scripts. |

See also Section 6, “Attributes” in the Orocos CoreLib reference.

See Section 5.1, “Task Property Configuration and XML format” for storing and loading the Properties to and from files, in order to store a TaskContext's state.

| Purpose | |

|---|---|

A task's command functions are intended to be executed 'in the owner's thread', thus are executed asynchronously with respect to the caller. Use it to 'reach a goal' or do a lengthy calculation in the receiver. Command functions are, in contrast with methods, executed by the receiver's thread Calling and executing commands is real-time and thread-safe with respect to updateHook, scripting etc running in the receiving component. |

The command interface is very similar to the Method interface above. The diffence with methods are:

The 'command function' of the TaskContext is executed in the thread of the receiving TaskContext. Is is an asynchronous function call.

The arguments of the 'command function' are stored in a command object which makes them available when the function is finally executed in the receiving thread. This allows multiple commands to be queued at the same time with different arguments.

The return value of a command function must always be a bool.

There is a second function, the 'completion condition' which is called to check if the command's effect is done. Take a command to move to a position for example. The 'command function' programs the target position, while the 'completion condition' will check if the target position has been reached, long after the command function has been executed. Commands will often work together with the

updateHook()function which further processes the command's data.

To add a command to the Command Interface, one must create Command, objects :

#include <rtt/Command.hpp>

class MyTask

: public TaskContext

{

protected:

/**

* The first command starts a 'cycle'.

*/

bool startCycle() { ... }

/**

* The completion condition checks if updateHook() has

* finished processing this command.

*/

bool cycleDone() const { ... }

Command<bool(void)> cycleCommand;

/**

* This command programs the Task to go to a position.

*/

bool gotoPosition(double p) { ... }

/**

* The completion condition checks if updateHook() has

* finished processing this command.

*/

bool positionReached(double p) const { return p == cur_pos; }

Command<bool(double)> gotoCommand;

/**

* The commands 'program' the TaskContext, the

* updateHook function, finishes off the command

* over a period of time.

*/

void updateHook() {

if ( inCycleMode() ) {

nextCycleStep();

}

if ( inGotoMode() ) {

incrementPosition();

}

}

public:

MyTask(std::string name)

: TaskContext(name),

cycleCommand("startCycle",

&MyTask::startCycle,

&MyTask::cycleDone, this),

gotoCommand( "gotoPosition",

&MyTask::gotoPosition,

&MyTask::positionReached, this)

{

// ... other startup code here

this->commands()->addCommand( &cycleCommand,

"Start a new cycle.");

this->commands()->addCommand( &gotoCommand,

"Goto a position.",

"pos", "A position endpoint.");

}

};Clearly, commands differ from Methods in that they take an extra function which is called the completion condition. It is a function which returns true when the command's effect is done. The command itself also returns a boolean which indicates if it was accepted or not. Reasons to be rejected can be faulty arguments or that the system is not ready to accept a new command.

The Command object requires two function

pointers instead of one, which must both return a 'bool'. The

first one is the command function that does the actual work,

and the completion condition is a function having :

exactly the same arguments as the command,

OR only the first argument of the command,

OR no arguments at all.

Analogous to addMethod(),

addCommand adds the Command objects to

the TaskContext interface and also requires a string

describing the command, and two strings giving a name and

description for every argument.

Once a command is added to a TaskContext's interface, other tasks can make use of that command.

The Command class can be used to invoke commands as well. You can get such object from a task's interface:

Command<bool(void)> mycom

= a_task.commands()->getCommand<bool(void)>("startCycle");

// check if the command is ok:

assert( mycom.ready() );

// Send 'startCycle' to a_task (asynchronous).

bool result = mycom();

// next, check its status:

bool accepted = mycom.accepted(); // accepted by execution engine?

bool valid = mycom.valid(); // command was valid (well-formed)?

bool done = mycom.done(); // command was done?

Such commands can also be given arguments. Both constant arguments and variable arguments are supported:

// get a command:

Command<bool(double)> myGoto_1 =

a_task.commands()->getCommand<bool(double)>("gotoPosition");

bool d_arg = 5.0;

// Send 'gotoPosition' to a_task, reads contents of d_arg.

bool result_2 = myGoto_1(d_arg);The current implementation supports up to 4 arguments. Since the use of 'structs' is allowed, this is enough for most applications.

The above lets you write in a program script :

do startCycle() do gotoPosition( -3.0 )

when the program is loaded in a_task.

Commands returning false will propagate that error to the program or function calling that command, which will cause the program script to enter an error state, i.e. it stops its execution.

| Important | |

|---|---|

The same restrictions of Section 3.10, “Method Argument and Return Types” hold for the command and condition types, when you want to access them from program scripts. |

See also Section 3, “Commands” in the Orocos CoreLib reference.

| Purpose | |

|---|---|

A task's events are intended to be 'emitted', thus published by the task to subscribers. Use it to 'notify' interested parties of a change in the system. It allows you to have one or more functions called when an event happens. Publishing and reacting to an event is real-time. Only 'asynchronous' callbacks are thread-safe with respect to updateHook, scripting etc running in the receiving component. |

A task may register its events in its interface in order to be used by its state machines and other tasks as well. Events are defined and explained in the Orocos CoreLib reference, Section 4, “Events”.

Events can be easily added to a task's interface, much like methods are:

#include <rtt/Event.hpp>

class MyTask

: public TaskContext

{

// An event with a bool argument:

Event< void(bool) > turnSwitch;

// An event with three arguments:

Event< bool(double, double, double) > moveAxis;

public:

MyTask(std::string name)

: TaskContext(name),

turnSwitch( "turnSwitch" ),

moveAxis( "move" )

{

// ... other startup code here

// add it to the task's interface:

this->events()->addEvent( &turnSwitch,

"Turn switch description",

"d","Direction" );

this->events()->addEvent( &moveAxis,

"Move the axis."

"x","X axis position",

"y","Y axis position",

"z","Z axis direction");

}

};An Event object has the signature ('void(bool)') of the 'callback function' it will call when the event is 'emitted' (or 'fired'). The object is initialised with a name ("turnSwitch") and added to the interface ('addEvent').

Once events are added, they can be emitted using the Event object.

Event< bool(double, double, double) > move_event = a_task.events()->getEvent( "move" ); assert( move_event.ready() ); // emit the event 'move' with given args: move_event(1.0, 2.0, 3.0); // or with variable arguments: double a = 0.3, b = 0.2, c = 0.1; move_event(a, b, c);

Analogous to emitting an event, one can also react to an event in C++, using the Event interface. Event connections can be accessed through the Handle object. The first example shows how to setup a synchronous connection to the event of the previous examples:

#include <boost/bind.hpp>

/**

* Example: Connect a class method to an Event.

*/

class Reactor

{

public:

bool react_callback(double a1, double a2, double a3) {

// use a1,a2, a3

return false; // return value is ignored.

}

};

/**

* Example: Connect a 'C' function to an Event.

*/

bool foo_callback( double a1, double a2, double a3 ) {

// use a1, a2, a3

return false; // ignored.

}

// Class callback:

Reactor r;

// WARNING: this requires events to be registered to

// the scripting interface (see warning note below).

Handle h

= a_task.events()->setupConnection("move")

.callback( &r, &Reactor::react_callback )

.handle();

assert( h.ready() );

h.connect(); // connect to event "move"

move_event(1.0, 2.0, 3.0); // see previous example.

// now Reactor::callback() was called.

h.disconnect(); // disconnect again.

// 'C' Function callback:

// WARNING: this requires events to be registered to

// the scripting interface (see warning note below).

h = a_task.events()->setupConnection("move")

.callback( &foo_callback )

.handle();

h.connect();

move_event(4.0, 5.0, 6.0)

// now foo_callback is called with arguments. | Warning | |

|---|---|

The RTT makes the distinction between adding a primitive to

the C++ interface and adding to the C++ & scripting

interface. For using the event callback mechanism, you

always need to add to the C++ & scripting interface,

since it requires that functionality. You must use

|

| Note | |

|---|---|

Using the |

Analogous to the event example in the CoreLib reference

(Section 4, “Events”),

a class funcion is made to react to the event. A connection

is setup between the "move" event and the react_callback

function of "r". The connection can be controlled using

the handle to connect or disconnect the reaction to events.

When connect() is called, every

event invocation will call react_callback()

with the given arguments. Using a 'C' function works analogous as shown

above.

A second example continues, but uses an asynchronous connection. First a new task (b_task) is created which will handle the event asynchronously. During setup, the EventProcessor of b_task's Execution Engine is used to process the event.

TaskContext b_task("BTask"); b_task.setActivity( new Activity(5, 0.1) ); // priority, period ptask_b.run( &b_task ); ptask_b.start(); // WARNING: see the warning above about using callback Handle h3 = a_task.events()->setupConnection("move") .callback(&r, &react_callback, b_task.engine()->events() ).handle(); assert( h3.ready() ); h3.connect(); // connect asynchronously to event "move" move_event.emit(); // see previous example. // wait a bit... // now react_callback() was called from within b_task's execution engine.

Note that after passing the object and function, the EventProcessor

of b_task is added in the callback method, such

that the callback is executed in b_task's thread.

Events are as easy to use as methods (above) from within scripts, using the keyword do:

do ATask.move( 1.0, 2.0, 3.0 )

It is also possible to react to events from within a state machine in order to change state. We refer to the Program Parser Manual for syntax and examples.

A Real-Time system exists of multiple concurrent tasks which must communicate to each other. TaskContext can be connected to each other such that they can communicate Real-Time data.

The addPeer and connectPeers

functions are used to connect TaskContexts and allow them

to use each other's interface. The connectPorts

function sets up the data flow between tasks.

We call connected TaskContexts "Peers" as there is no fixed

hierarchy. A connection from one TaskContext to its

Peer can be uni- or bi-directional. In a uni-directional connection (addPeer ),

only one peer can use the interface of the other, while

in a bi-directional connection (connectPeers), both can use

each others interface.

This allows to build strictly hierarchical topological

networks as well as complete flat or circular networks or any

kind of mixed network.

Peers are connected as such (hasPeer takes a string

argument ):

// bi-directional :

connectPeers( &a_task, &b_task );

assert( a_task.hasPeer( &b_task.getName() )

& b_task.hasPeer( &a_task.getName() ) );

// uni-directional :

a_task.addPeer( &c_task );

assert( a_task.hasPeer( &c_task.getName() )

& ! c_task.hasPeer( &a_task.getName() ) );

// Access the interface of a Peer:

Method<bool(void) m = a_task.getPeer( "CTask" )->methods()->getMethod<bool(void)>("aMethod");

// etc. See interface usage in previous sections.

Both connectPeers and addPeer

allow scripts or C++ code to use the interface of a connected Peer. connectPeers

does this connection in both directions.

From within a program script, peers can be accessed by merely prefixing their name to the member you want to access. A program within "ATask" could access its peers as such :

var bool result = CTask.aMethod()

The peer connection graph can be traversed at arbitrary depth. Thus you can access your peer's peers.

Data Flow between TaskContexts is setup by using connectPorts.

The direction of the data flow is imposed by the read/write direction of

the ports. The connectPorts(TaskContext* A, TaskContext* B) function

creates a connection between TaskContext ports when both ports

have the same name and type. It will never disconnect existing connections

and only tries to add ports to existing connections or create new

connections.

Before calling connectPorts, one may connect individual ports,

e.g., when the portnames do not match or when complexer data

flow networks need to be formed. Suppose that Task A has a

port a_port, Task B a b_port and Task C a c_port (all are of type PortInterface*). Then

(shared) connections are made as follows:

b_port->connectTo( a_port ); c_port->connectTo( a_port );

Note that the order of ports matters; the following would NOT work:

a_port->connectTo( b_port ); // ok... a_port->connectTo( c_port ); // returns false !

It would leave c_port unconnected, because a_port already has a connection and refuses to connect in that case.

| Note | |

|---|---|

Only use this note if you're an advanced user: In case you want to override buffer/port parameters when you connect ports, you can connect ports in your main() program by writing: #include <rtt/ConnectionFactory.hpp> // ... int buf_size = 100; ConnectionFactory<double> cf; // creates an 'unconnected' connection object: ConnectionInterface::shared_ptr con = cf.createBuffer(a_task->ports()->getPort("SetPoint_X"), b_task->ports()->getPort("SetPoint_X"), buf_size); // use createDataObject() for data ports. // if all went well, 'connect' the connection object: if (con) con->connect(); // this is mandatory ! Do this before the ports are connected. Then connect all other necessary ports to that connection, using c_port.connectTo( con ); . |

Example 2.1. TaskContext Data Flow Topology Example

This diagram shows some possible topologies. Four tasks, "A", "B", "C" and "D" have each a port "MyData" and a port "MyData2". The example demonstrates that connections are always made from writer (sender) to reader (receiver).

Example data flow networks.

The first network has two writers and two readers for "MyData". It can be formed starting from "A" and adding "B","C" and "D" as peers of "A" respectively. Since the network started from "A" all peers share the same connection. The same network could have been formed starting from "B". The second diagram connects "A" to "C" and then "B" to "D". Two connections are now made and if the application tries to connect "B" to "C", this will fail since the "MyData" Port of "C" already participates in a connection.

The third network has one writer and three readers for "MyData2". It can now only be formed starting from "D" and adding "A","B" and "C" as peers to "D". Combining both network one and three is possible by merely invoking all the 'addPeer' methods in the correct order.

connectPorts tries to create connections in

both directions. If a task's Port already has a connection, any

task with compatible, unconnected ports will be added to that

connection. For example, if "a_task" and "b_task" exchange a

Data type "Data_X", and "c_task" reads "Data_X", the

connectPorts will forward "Data_X" to "c_task"

as well.

Tasks can be disconnected from a network by invoking

disconnect() on that task. It will

inform all its peers that it has left the network and

disconnect all its ports. This does not mean that

all data flow connections are deleted. As long as one task's

port still participates in a connection, the connections

exist. When the last port disconnects, the data flow connection

is cleaned up as well.

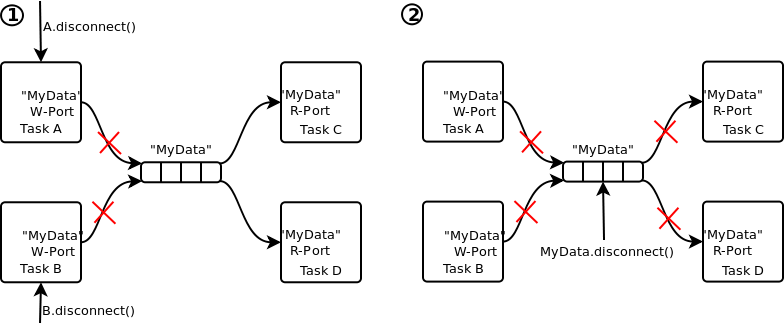

Example 2.2. TaskContext Peer Disconnection Example

(1) shows what would happen if tasks "A" and "B" are disconnected from the network. (2) shows what would happen if the connection itself is disconnected.

Disconnecting tasks: one can disconnect a whole task or disconnect only a port or connection of a task.

When A.disconnect() is called (1),

it removes its participation from the "MyData" connection.

The same happens for B.disconnect().

"C" and "D" read then from a connection which has no more

writers. Adding "A" again to the network would make "A"

again a writer of "MyData". If both "C" and "D" call disconnect

as well, the "MyData" connection is cleaned up.

a_task.disconnect();

assert( !a_task.hasPeer( &b_task.getName() )

&& !b_task.hasPeer( &a_task.getName() );

b_task.disconnect();

assert( !c_task.hasPeer( &b_task.getName() )

&& ! d_task.hasPeer( &b_task.getName() );

Data Flow connections can be disconnected (2) as well, in which case all ports are disconnected.

ConnectionInterface::shared_ptr con = a_task.ports()->getPort("MyData")->connection();

if (con)

con->disconnect();

assert( !a_task.ports()->getPort("MyData").connected() );

assert( !b_task.ports()->getPort("MyData").connected() );

assert( !c_task.ports()->getPort("MyData").connected() );