Main Page

This is the Main Orocos.org Wiki page.

From here you can find links to all Orocos-related wiki pages.

Orocos Wiki pages are organised in 'books'. In each book you can create child pages, edit them and move them around. The Wiki itself creates an overview of the child pages of each book.

To create a new page, click 'Add Child page' below. To edit a page, click on the Edit tab of that page. You can also add a link to a page that has not been written yet, using the Example Page syntax. When that link is clicked and the page does not exist, you are offered the chance to create and write it.

Currently, the Orocos wiki pages are written in MediaWiki style. You should create your pages in this style as well.

Feel free to click on the 'Edit' tab above to see how this page was written (and to improve it!).

Development

This section covers all development related pages.

Contributing

How you can get involved, contribute and participate in the Orocos project.

Resources

Development strategy

The Orocos toolchain uses git, with the official repositories hosted at Gitorious.

Branches

The various branches are:

- master: main development line, latest features. Replaces rtt-2.0-mainline.

- toolchain-2.0: stable release branch for the 2.0 release series.

- rtt-1.0-svn-patches: remains as the SVN bridge for the 1.x release series.

- ocl-1.0-svn: remains as the SVN bridge for the 1.x release series.

The master branch gets updated when new branches are merged into it by its maintainer. This can be a merge from the bugfix branches (i.e. a merge from toolchain-2.x) or a merge from a development branch.

The stable branch should always point to the latest toolchain-2.x tip. This isn't automated, so it lags behind (this is probably something for a Hudson job or a git commit hook).

All branches named rtt-2.0-... are no longer updated. The rtt-2.0-mainline branch has been merged into master, which means that if you have an rtt-2.0-mainline branch checked out, you can simply run git pull origin master and it will fast-forward your tree to the master branch; alternatively, check out your local master branch.
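If you want to see the fast-forward happen before touching a real clone, the bash sketch below rehearses it in a throw-away repository. It builds a local stand-in for the official repository in which rtt-2.0-mainline is an ancestor of master, clones it, checks out rtt-2.0-mainline and pulls master with --ff-only, which makes git refuse anything other than a fast-forward. The paths, commit messages and the ff_demo function name are made up for the illustration.

```shell
#!/usr/bin/env bash
# Sketch: rehearse the rtt-2.0-mainline -> master fast-forward in a throw-away
# repository. Paths, commit messages and the ff_demo name are made up.

ff_demo() (
  set -euo pipefail
  work=$(mktemp -d)
  export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.org
  export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.org

  # Stand-in for the official repository: rtt-2.0-mainline is an ancestor of master.
  git init -q "$work/upstream"
  cd "$work/upstream"
  git symbolic-ref HEAD refs/heads/master
  git commit -q --allow-empty -m "last rtt-2.0-mainline commit"
  git branch rtt-2.0-mainline
  git commit -q --allow-empty -m "newer work on master"

  # A developer clone that still has rtt-2.0-mainline checked out.
  git clone -q "$work/upstream" "$work/clone"
  cd "$work/clone"
  git checkout -q rtt-2.0-mainline

  # The step from the text; --ff-only makes git refuse anything but a fast-forward.
  git pull -q --ff-only origin master

  if [ "$(git rev-parse HEAD)" = "$(git rev-parse origin/master)" ]; then
    echo "fast-forwarded to master"
  fi
  cd / && rm -rf "$work"
)

ff_demo
```

On a real clone only the git pull line matters; the rest of the function just fabricates the situation described above.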

Contributing packages

You may contribute a software package to the community. It must respect the rules set out in the Component Packages section. Sufficiently general packages can be adopted by the Orocos Toolchain Gitorious project. Make sure that your package name contains only letters, digits and underscores. A dash ('-') is not acceptable in a package name.
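A quick way to check a name before publishing is a regular-expression test. The bash sketch below encodes the rule stated above (letters, digits and underscores only, so any dash is rejected) in a hypothetical is_valid_package_name function; it is an illustration, not part of any Orocos tool, and it does not check any other naming rule.

```shell
#!/usr/bin/env bash
# Sketch: check a proposed package name against the naming rule above.
# is_valid_package_name is a hypothetical helper, not an Orocos command.

is_valid_package_name() {
  local name="$1"
  # [[ =~ ]] uses POSIX extended regular expressions; the ^...$ anchors force
  # the whole name to match, so a single '-' anywhere makes the test fail.
  [[ "$name" =~ ^[A-Za-z0-9_]+$ ]]
}

for candidate in my_driver laser_scanner2 my-driver; do
  if is_valid_package_name "$candidate"; then
    echo "$candidate: ok"
  else
    echo "$candidate: rejected"
  fi
done
```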

Contributing patches

Small contributions should go to the mailing lists as patches. Larger features are best communicated using topic branches in a git clone of the official repositories. Send pull requests to the mailing lists. These topic branches should be hosted on a publicly available git server (e.g. GitHub, Gitorious).
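For a small contribution, git can produce the patch file for you. The bash sketch below runs in a throw-away repository (the branch name, file and commit message are made up): it commits a change on a topic branch and uses git format-patch to write one mailable patch file per commit since master. For larger features you would instead push the topic branch to your public clone and announce it on the mailing list.

```shell
#!/usr/bin/env bash
# Sketch: turn one commit on a topic branch into a patch file for the mailing
# list. Uses a throw-away repository; all names are made up for the example.

make_patch_demo() (
  set -euo pipefail
  repo=$(mktemp -d)
  export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.org
  export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.org
  git init -q "$repo"
  cd "$repo"
  git symbolic-ref HEAD refs/heads/master
  echo "v1" > README
  git add README
  git commit -q -m "initial import"

  # Work on a topic branch based on the branch the fix should be merged into.
  git checkout -q -b fix-readme master
  echo "v2" > README
  git commit -q -am "Fix README wording"

  # One numbered file per commit in master..HEAD, ready to attach or send.
  git format-patch -q -o "$repo/patches" master
  ls "$repo/patches"
  cd / && rm -rf "$repo"
)

make_patch_demo
```

The function prints the name of the generated patch file; in a real clone you would run only the git checkout, git commit and git format-patch steps.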

NB: for Orocos v1, no git branches will be merged (because v1 is maintained in SVN); send individual patches instead. For v2, git branches can be merged without problems.

Making suggestions

The easiest way to make suggestions is to use the mailing list (register here). This allows discussion about what you are suggesting (after all, someone else may already be working on it), and informs others of what you are interested in (or are willing to do).

Reporting bugs

Before reporting a bug, please check the Bug Tracker, the mailing list and the forum to see whether this is a known issue. If it is a new issue, then (TBD) email the mailing lists, OR enter an issue in the bug tracker.

Orocos developers meetup at ERF

Goals of the meeting: discuss the future of the Orocos toolchain w.r.t. Rock and ROS.

Identified major goals

- make Orocos components usable across all use cases (ROS, Orocos and Rock). There should be no "rock-only" or "ros-only" components.

- make as much of the Rock toolchain as possible usable with "plain Orocos components" (see below)

- make the parallel usage of the three cases (Orocos toolchain, Orocos in ROS and Rock) as painless as possible

Sharing installations of the orocos toolchain

The main issue is the ability to compile the toolchain and components only once, e.g. in a Rock installation, and use them in ROS or vice-versa.

- use the already existing ignore_packages mechanism. This is manual, but should work. A wiki page on how to set it up for sharing an Orocos installation still needs to be created.

- more automatic mechanisms:

  - allow dependencies between autoproj installations. Fully automatic for Orocos toolchain/Rock interoperability

  - point to a prefix and have autoproj find out things (for ROS installs)

Sharing Orocos components across use-cases

Using rock components on plain Orocos should just work [needs testing and documentation].

Using rock tools on plain Orocos

This would require the use of oroGen or typeGen:

- as far as we know, no "core" functionality is missing in oroGen to make typeGen usable for "core" Orocos libraries like KDL. Some functionality, such as opaques, still needs to be made available to typeGen (it is currently only available to oroGen). This can be done by making typeGen able to load an oroGen SL file (trivial).

- allow passing -I options directly to both oroGen and typeGen

- mechanism to define "side-loading" typekits that define constructors and operators separately for scripting

- the core of the Rock tooling is orocos.rb. Need to test and update orocos.rb so that it can work without a model. Method: update the test suite by mocking TaskContext#model to return nil and/or getModelName to not exist. From there, test tools like oroconf and vizkit

Dataflow between ROS and Rock / plain Orocos

- need data conversions: one must be able to publish a C++ type over a ROS topic and vice-versa

- typegen generation for ROS messages

- type specification when creating ROS streams (since the ROS topic and the orocos port might have different types)

- type conversions on the data flow: use already existing constructor infrastructure to do the conversion, need to create the channel element and change the connection code

- add type conversion support in oroGen (a system equivalent to opaques)

Other discussed topics

- make TypeInfo very thin so that we can register it once per type and never change it. Only transports, constructors, etc. could then be overridden

- make the deployer a library

Roadmap

Where things are going, and how we plan to get there.

See also Roadmap ideas for 3.x for some really long-term ideas.

TODO Autoproj, RTT, OCL, etc.

Real-time logging

The goal is to provide a real-time safe, low-overhead, flexible logging system usable throughout an entire system (i.e. within both components and any user applications, such as GUIs).

We chose to base this on log4cpp, one of the C++-based derivatives of log4j, the respected Java logging system. With only minor customizations, log4cpp is now usable in user component code (but not in RTT code itself, see below). It provides real-time safe, hierarchical logging with multiple logging levels (e.g. INFO vs DEBUG).

Near future

- Provide a complete system example demonstrating use of the real-time logging framework in both user components, and a GUI-based application. Based on the v2 toolchain.

- Provide a component-based appender demonstrating transport of logging events. Most likely demonstrate centralized logging of a distributed system.

- Add logging system stress tests. (I already have these for v1, but they need to be ported to v2 and submitted.)

- Allow multiple appenders per category. This is simply a technical limitation of the initial approach, and should be readily changeable.

Long term plans

- Replace the existing RTT::Logger functionality with the real-time logging framework. This really can't involve rewriting all the logging statements in RTT, etc.

- Provide levels of DEBUG logging. Some logging systems use FINE, FINER and FINEST levels, whilst others use DEBUG plus an integer level within debug (e.g. debug-1 through debug-9, from verbose to most verbose). Choose one approach, and modify log4cpp to support it.

- Support use by scripting and state machines (possibly also Lua?). This means both being able to log, as well as being able to configure categories, appenders, etc.

Catkin-ROS build-support plan

Target versions

These changes are for Toolchain >= 2.7.0 + ROS >= Hydro

Goals

Support building in these workflows:

- Autoproj managed builds (Rock-style)

  - depends on: manifest.xml for meta-build info.

  - Rock users don't use the UseOrocos.cmake macros, since their CMakeLists and .pc files get generated anyway by oroGen.

- CMake managed builds (every-package-its-own-build-dir-style)

  - depends on: pkg-config files to track linking and includes

  - uses the UseOrocos.cmake file; autolinking is done by parsing the manifest.xml file

- ROSbuild managed builds

  - depends on: the manifest.xml file; the rosbuild cmake macros read it and the .pc files, and the Orocos cmake macros read it to link properly (no build flags from the manifest file!)

  - will only be used if the user explicitly called rosbuild_init() in the top-level CMakeLists.txt

- Catkin managed builds

  - depends on: the package.xml file and the Orocos-generated pkg-config files

  - the Catkin .pc files will be generated but ignored by us (?)

  - no auto linking

  - we'll also generate .pc files in the devel path during the cmake run

Effects on (Runtime) Environment

- Deployer's import ?

- ROS Deb packages ?

- Orocos Deb packages ?

CMake changes or new macros

- Auto linking

- orocos_use_package()

- orocos_find_package()

- orocos_generate_package()

Roadmap ideas for 3.x

While the project is still in the (heavy?) turmoil of the 1.x-to-2.x transition, it might be useful to start thinking about the next version, 3.x. Below are a number of developments and policies that could eventually become 3.x; please use the project's (user and developer) mailing lists to give your opinions, using a 3.x Roadmap message tag.

Disclaimer: there is nothing official yet about any of the below-mentioned suggestions; on the contrary, they are currently just the reflections of one single person, Herman Bruyninckx. (Please, update this disclaimer if you add your own suggestions.)

General policies to be followed in this Roadmap:

- the anti-Not-Invented-Here policy: whenever a FOSS project already has a solution for (part of) this roadmap, we should try to cooperate with that project, instead of putting effort into our own version.

- the big-critical-mass-projects-first policy: when confronted with the situation above, it is much preferred to cooperate with (contribute to) projects that have a high critical mass (CMake, Linux, Eclipse, Qt, etc.) instead of with single-person or single-team projects, even when the latter currently have better functionality and ideas. At the same time, promising single-person projects will be encouraged to make their efforts useful in a larger critical-mass project.

Orocos distribution

Much can be improved to bring Orocos closer to users, and a simple-to-install distribution is a proven best practice. However, Orocos should not try to develop its own distribution, but should rather hook on to existing, successful efforts. ROS is the obvious first choice, and orocos_toolchain_ros is the concrete initiative that has already started in this direction. However, this "only" makes "some" relevant low-level Orocos functionality available in a form that is easier to install for many robotics users; to allow users to profit from all Orocos functionality, the following extra steps have to be taken:

- a "Hello Robot!" application, installable as a ROS stack. It could contain a simulated robot, visualised in Morse or Gazebo, and componentized in an RTT component, together with an RTT/KDL/BFL-based set of motion controllers and estimators. (Morse is currently the most promising candidate, from a component-based development point of view.)

- a (Wiki) book that explains the whole setup, not just from a software point of view, but also motivating why the presented example could be considered a "best practice" robotics system. This Wiki book should not be an Orocos-only effort, but should be useful for the whole community.

- a similar "Hello Machine!" application, targeting not the robotics community, but the mechatronics, or machine tools community.

Contributors to this part of the Roadmap need not be RTT developers, but motivated users!

RTT

The road towards better decoupling, as started in 2.x, is designed and implemented further:

- RTT will get a complete and explicit component model, consisting of component, composite component, connection, port, interface, discrete behaviour, and communication:

  - The OROMACS development at the University of Twente has already produced a core of the composite component. That concept is required for full support of the Model-Driven Engineering approach.

  - the connection is the data-less, event-less and command-less representation of the architecture of a system, consisting only of the identification of which components will interact with each other.

  - the difference between a port and an interface is that a port belongs to a component and implements an interface; the interface itself must become a first-class citizen of the component model.

  - discrete behaviour is the current state machine. Further developments in this context are probably only to be expected on the implementation and tooling front.

  - communication: Orocos has had, from day one, the ambition not to provide communication middleware, since there are so many other projects that do that. RTT should, however, improve its decoupling of (i) using data structures inside a component, (ii) providing them for communication in a port, and (iii) transporting them from one component's port to another component's port. Maybe this is as easy as cleanly separating the configuration files for all three aspects; maybe it is more involved than that.

- the mapping onto real hardware resources (computational threads, communication field buses) is separated from the definition of a component.

- the process of defining data flow data structures is supported by an IDL. This IDL has to be chosen together with other projects, and should not be an Orocos-only effort. A real IDL includes the definition of the meaning of the fields in the data, and not just their computer language representation.

- the codel idea of GenoM3 is supported for the construction of continuous behaviour inside a component. The important role of the codel idea in the context of realtime systems is that it gives the component designer full control over when which computations are executed (instead of relying on the OS scheduler); this requires a design in which computations can be subdivided into pre-emptible pieces (codels), which can be scheduled in efficient Directed Acyclic Graphs.
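To make the DAG-scheduling idea concrete, the bash sketch below orders a handful of codels with the standard tsort(1) utility, which performs a topological sort of the dependency graph. The codel names and dependencies are invented, and the sketch only computes a valid execution order; it does not model pre-emption, timing, or the GenoM3 codel API.

```shell
#!/usr/bin/env bash
# Sketch: order a component's codels so that each codel runs only after the
# codels it depends on. Codel names and dependencies are invented; tsort(1)
# (POSIX, shipped with coreutils) does the topological sort.

# Each line "A B" is an edge of the DAG: codel A must finish before codel B starts.
codel_deps() {
  cat <<'EOF'
read_encoders   estimate_state
read_laser      estimate_state
estimate_state  compute_control
compute_control write_actuators
EOF
}

# Prints one codel per line in an order that respects every edge;
# tsort reports an error instead if the dependencies contain a cycle.
codel_schedule() {
  codel_deps | tsort
}

codel_schedule
```

The two sensor codels have no mutual dependency, so either may come first; a real scheduler could run them in parallel or interleave them as the designer chooses.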

Contributors to this part of the Roadmap need to be RTT developers!

BFL, KDL, SCL

SCL does not yet exist, but there is a high and natural need for a Systems and Control Library, next to BFL and KDL. All three libraries share a common fundamental design property: they can all be considered special cases of executable graphs, so common support will be developed for the flexible, configurable scheduling of all computations (codels) in complex networks (Bayesian networks, kinematic/dynamic networks, control diagrams).

Contributors to this part of the Roadmap need not be RTT developers, but domain experts that have become power users of the RTT infrastructure!

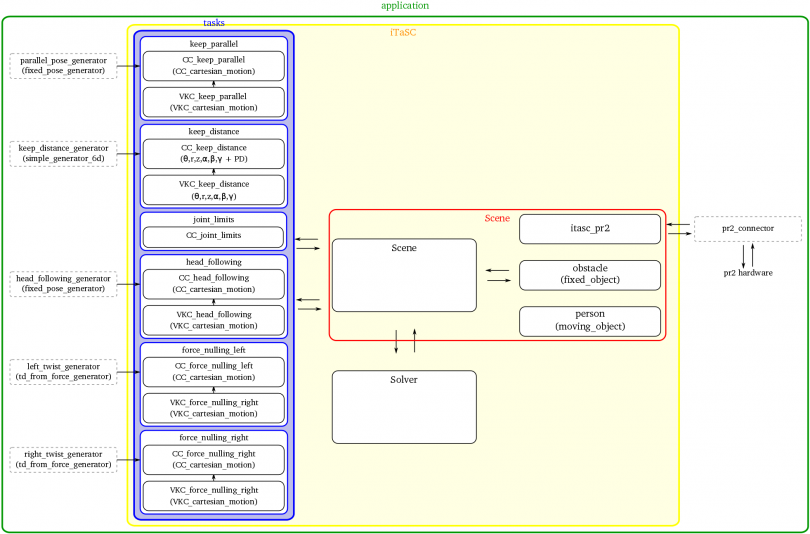

iTaSC and beyond

A usable robotics control system consists, of course, not only of RTT, BFL, KDL and/or SCL components; there is also an obvious need for a task primitive: the brain that contains all the knowledge about when to use which component, with what configuration, and until what conditions are satisfied.

As a first step, the instantaneous version of a constraint-based optimization approach to task-level control will be provided. Following steps will extend the instantaneous idea towards non-instantaneous tasks. This extension must be focused on tasks that require realtime performance, since non-realtime solutions are provided by other projects, such as ROS.

Contributors to this part of the Roadmap need not be RTT developers, but domain experts that also happen to be average users of the RTT infrastructure! They will open up the functionalities of Orocos to the normal end-user.

Tooling

More and improved tools have been a major feature of the 2.x evolution. The major tooling effort for 3.x will be to bring the above-mentioned component model into the Eclipse ecosystem. The first efforts in this direction have started in the context of the European project BRICS.

Contributors to this part of the Roadmap need not be RTT developers, but programmers familiar with the advanced Eclipse features, such as ecore models, EMG, etc.

European Robotics Forum 2011 Workshop on the Orocos Toolchain

At the European Robotics Forum 2011 Intermodalics, Locomotec and K.U.Leuven are organizing a two-part seminar, appealing to both industry and research institutes, titled:

- Real-Time Robotics with state-of-the-art open source software: case studies (45min presentation, open to all)

- Exploring the Orocos Toolchain (2 hours hands-on, registration required)

The session will be on April 7, 9h00-10h30 + 11h00-12h30

Remaining seats: 0 out of 20 (last update: 06/04/2011)

Real-Time Robotics with state-of-the-art open source software: case studies

In this presentation, Peter Soetens and Ruben Smits introduce the audience to today's Open Source robotics ecosystem. What are the strong and weak points of existing software? Which packages work seamlessly together, and on which operating systems (Windows, Linux, VxWorks, ...)? We will back our statements with practical examples from both academic and industrial use cases. This presentation is the result of the presenters' long-standing experience with open source technologies in robotics applications and will offer the audience leads and insights to further explore this realm.

Exploring the Orocos Toolchain

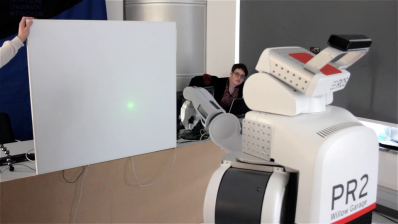

In this hands-on session, the participants are invited to bring their own laptop with Orocos and (optionally) ROS installed. We will support Linux, Mac OS-X and Windows users and will provide instructions on how to prepare for participation.

YouBot: A real and simulated YouBot will be used

We will let the participants experience that the Orocos toolchain:

- nicely integrates with other popular robotics software

- provides the hooks and extensions to allow application supervision

- gives you the possibility to write expressive, compact, yet real-time applications

- makes 'separation of concerns' in software a natural habit for the programmer

- makes it easy to script an application together and to inspect and modify it while it is running

If you use the bootable USB sticks prepared by the organisers, you can skip all installation instructions and directly start the assignment at https://github.com/bellenss/euRobotics_orocos_ws/wiki

If you are attending the hands-on session, you can bring your own computer. Depending on your operating system, install the necessary software using the following installation instructions:

YouBot Demo Setup

The workshop will start by making you familiar with the Orocos Toolchain, which does not require the YouBot. The hands-on session will then continue with a robot in simulation and on the real hardware. We will use the ROS communication protocol to send instructions to the simulator (Gazebo) or the YouBot. Installing Gazebo is not required, since this simulation will run on a dedicated machine. Documentation on the workshop application and the assignment can be found at https://github.com/bellenss/euRobotics_orocos_ws/wiki.

Registration

You first need to register to attend the euRobotics Forum. Registration for the workshop is mandatory, but free of charge. For the hands-on session, we will limit the number of participants to 20. The workshop is guided by 6 experienced Orocos users. Please register your participation by sending an email to info at intermodalics dot eu. We will confirm your participation with a short notice. Later on, you will receive a second email with more details about how to prepare. You should receive this second, detailed email in the week of March 20, 2011.

euRobotics Forum Linux Setup

Toolchain Installation

The installation instructions depend on whether you have ROS installed or not.

NOTE: ROS is required to participate in the YouBot demo.

With ROS on Ubuntu Lucid/Maverick

Install ROS Diamondback using the Debian packages for Ubuntu Lucid (10.04) and Maverick (10.10), or using the ROS install scripts in case you don't run Ubuntu.

- Install the ros-diamondback-orocos-toolchain-ros debian package version 0.3.1 or later:

sudo apt-get install ros-diamondback-orocos-toolchain-ros

After this step, proceed to the Workshop Sources section below.

With ROS on Debian, Fedora or other systems

- We did not manage to release the Diamondback 0.3.0 binary packages of the Orocos Toolchain for your platform. This means that you need to build this 'stack' yourself with 'rosmake', after you have installed ROS (see http://www.ros.org/wiki/diamondback/Installation). This 'rosmake' step may take 30 minutes to an hour, depending on your laptop.

Instructions after ROS is installed:

source /opt/ros/diamondback/setup.bash
mkdir -p ~/ros
cd ~/ros
export ROS_PACKAGE_PATH=$HOME/ros:$ROS_PACKAGE_PATH
git clone http://git.mech.kuleuven.be/robotics/orocos_toolchain_ros.git
cd orocos_toolchain_ros
git checkout -b diamondback origin/diamondback
git submodule init
git submodule update --recursive
rosmake --rosdep-install orocos_toolchain_ros

NOTE: setting ROS_PACKAGE_PATH is mandatory in each shell that will be used. It's a good idea to add the export ROS_PACKAGE_PATH line above to your .bashrc file (or equivalent).
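If you rerun your setup steps, appending the export line blindly leaves duplicate lines in .bashrc. The bash sketch below, built around a hypothetical add_line_once helper, appends a line only when the file does not already contain it verbatim. The call on your real ~/.bashrc is left commented out, so running the sketch changes nothing.

```shell
#!/usr/bin/env bash
# Sketch: append a line to a shell start-up file only if it is not there yet.
# add_line_once is a hypothetical helper, not part of ROS or Orocos.

add_line_once() {
  local line="$1" file="$2"
  touch "$file"
  # -q: exit status only, -x: whole-line match, -F: fixed string (no regex)
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage on the real start-up file; the single quotes keep $HOME and
# $ROS_PACKAGE_PATH unexpanded until .bashrc itself is sourced:
# add_line_once 'export ROS_PACKAGE_PATH=$HOME/ros:$ROS_PACKAGE_PATH' "$HOME/.bashrc"
```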

Without ROS

- Run the bootstrap.sh (version 2.3.1) script in an empty directory.

Workshop Sources

An additional package is being prepared that will contain the workshop files. See euRobotics Workshop Sources.

euRobotics Forum Mac OS-X Setup

Toolchain Installation

Due to a dynamic library issue in the current 2.3 release series, Mac OS-X cannot be supported during the workshop. We will make available a bootable USB stick containing a pre-installed Ubuntu environment with all necessary packages.

euRobotics Forum Windows Setup

Toolchain Installation

Windows users can participate in the first part of the hands-on session, where Orocos components are created and used. Note that installing the Orocos Toolchain on Win32 platforms may take a full day if you are not familiar with CMake, compiling Boost or any other dependency of the RTT and OCL.

Requirements:

- Visual Studio 2005 or 2008

- CMake 2.6.3 or newer (2.8.x works too)

- Boost C++ 1.40.0

- Readline for Windows (see Taskbrowser with readline on Windows)

- Cygwin installation (default setup is fine)

See the Compiling on Windows with Visual Studio wiki page for instructions. The TAO/Corba part is not required to participate in the workshop.

You need to follow the instructions for RTT/OCL v2.3.1 or newer, which you can download from the Orocos Toolchain page. We recommend building in Release mode.

If you have neither the time nor the experience to set this up, we provide bootable USB sticks that contain Ubuntu Linux with all workshop files.

Workshop Sources

An additional package is being prepared that will contain the workshop files. See euRobotics Workshop Sources for downloading the sources.

Windows users might also install Kst, a KDE plotting program that also runs on Linux. We provide a .kst file for plotting the workshop data. See the Kst download page.

Testing Your Setup

Once you have built and installed RTT and OCL, you can open a Cygwin or cmd.exe prompt and run the orocreate-pkg script, located in your c:\orocos\bin directory, to create a new package. Make sure that your PATH variable is properly extended with:

set PATH=%PATH%;c:\orocos\bin;c:\orocos\lib;c:\orocos\lib\orocos\win32;c:\orocos\lib\orocos\win32\plugins

Repeat the usual CMake steps with this package: generate the Solution file, then build and install it. Then start the deployer with the deployer-win32.exe program and type 'ls'. It should start and show meaningful information. If you see strange characters in the output, turn off the colors with the '.nocolors' command at the Deployer's prompt.

euRobotics Workshop Material

The euRobotics Forum workshop on Orocos has been a great success. About 30 people attended and participated in the hands-on workshop. The Real-Time & Open Source in Robotics track drew more than 60 people. Both tracks were overbooked.

You can find all presentation material in PDF form below

euRobotics Workshop Sources

There are two ways you can get the sources for the workshop:

- Using Git

- Using a zip file

Since the sources are still evolving, it might be necessary to update your version before the workshop.

Hello World application

The first, ROS-independent part uses the classical hello-world examples from the rtt-exercises package. You can either check it out with:

mkdir -p ~/ros
cd ~/ros
git clone git://gitorious.org/orocos-toolchain/rtt_examples
cd rtt_examples/rtt-exercises

Or you can download the examples from here. You need at least version 2.3.1 of the exercises.

If you're not using ROS, you can download/unzip it in another directory than ~/ros.

Youbot demo application

The hands-on session involves working on a demo application with a YouBot robot. The application allows you to

- Drive the YouBot around based on pose estimates using laser scan measurements

- Simulate the YouBot on your own computer

The Youbot demo application is available on https://github.com/bellenss/euRobotics_orocos_ws (this is still work in progress and will be updated regularly)

You can either check it out with

mkdir -p ~/ros
export ROS_PACKAGE_PATH=$ROS_PACKAGE_PATH:$HOME/ros
cd ~/ros
git clone http://robotics.ccny.cuny.edu/git/ccny-ros-pkg/scan_tools.git
git clone http://git.mech.kuleuven.be/robotics/orocos_bayesian_filtering.git
git clone http://git.mech.kuleuven.be/robotics/orocos_kinematics_dynamics.git
git clone git://github.com/bellenss/euRobotics_orocos_ws.git
roscd youbot_supervisor
rosmake --rosdep-install

Check that ~/ros is in your ROS_PACKAGE_PATH environment variable at all times, by also adding the export line above to your .bashrc file.
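To check this quickly in a shell, the bash sketch below splits ROS_PACKAGE_PATH on ':' and compares each entry with a given directory. The in_ros_package_path function name is hypothetical, and the comparison is an exact string match, so ~/ros and ~/ros/ (with a trailing slash) count as different entries.

```shell
#!/usr/bin/env bash
# Sketch: report whether a directory is one of the ':'-separated entries of
# ROS_PACKAGE_PATH. in_ros_package_path is a hypothetical helper.

in_ros_package_path() {
  local dir="$1" entry
  local -a entries
  # Split on ':' only; read -a avoids the globbing an unquoted expansion would do.
  IFS=':' read -r -a entries <<< "${ROS_PACKAGE_PATH-}"
  for entry in "${entries[@]}"; do
    # Exact match, so /home/u/ros does not match /home/u/ros2.
    [ "$entry" = "$dir" ] && return 0
  done
  return 1
}

if in_ros_package_path "$HOME/ros"; then
  echo "~/ros is on ROS_PACKAGE_PATH"
else
  echo "~/ros is missing: export ROS_PACKAGE_PATH=\$ROS_PACKAGE_PATH:\$HOME/ros"
fi
```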

Testing your setup

Here are some instructions to check whether your system is usable for the workshop.

Non-ROS users

You have built RTT with the 'autoproj' tool. This tool generated an 'env.sh' file. You need to source that file in each terminal where you want to build or run an Orocos application:

cd orocos-toolchain
source env.sh

Next, cd to the rtt-exercises directory that you unpacked, enter hello-1-task-execution, and type make:

cd rtt-exercises-2.3.0/hello-1-task-execution
make all
cd build
./HelloWorld-gnulinux

ROS users

You have built RTT from the orocos_toolchain_ros package. Make sure that you source the /opt/ros/diamondback/setup.bash script and that the unpacked exercises are under a directory of the ROS_PACKAGE_PATH:

cd rtt-exercises-2.3.0
source /opt/ros/diamondback/setup.bash
export ROS_PACKAGE_PATH=$ROS_PACKAGE_PATH:$(pwd)

Next, go into an example directory and type make:

cd hello-1-task-execution
make all
./HelloWorld-gnulinux

Testing the YouBot Demo

After you have built the youbot_supervisor, you can test the demo by opening two consoles and running the following commands in them:

First console:

roscd youbot_supervisor
./simulation.sh

Second console:

roscd youbot_supervisor
./changePathKst   # you only have to do this once after installation
kst plotSimulation.kst

If you do not have 'kst', install it with sudo apt-get install kst kst-plugins

European Robotics Forum 2012: workshops

At the European Robotics Forum 2012 KU Leuven and Intermodalics are organizing a three-part seminar, appealing to both industry and research institutes, titled:

- Introduction to state charts and reusable, modular task specification through the Orocos eco-system

- Hands-on1: getting started with state charts in the Orocos eco-system

- Hands-on2: getting started with instantaneous motion specification using constraints (iTaSC): reusable and modular task specification

The sessions will be on March 6, 8h30-10h30 + 11h00-12h30 + 13h30-15h00 (Track four). For more details, consult the European Robotics Forum program.

Remaining seats: (last update: March 2, 2012)

We're fully booked, but don't be shy to come and peek or sit in, although we can't guarantee you a table or a chair!

(Information on last year's workshop can be found here.)

Registration

You first need to register to attend the euRobotics Forum. Registration for the workshop is mandatory, but free of charge. For the hands-on sessions (hands-on 1 and hands-on 2), we will limit the number of participants to 20. The workshops are guided by different experienced Orocos users. Please register your participation by sending an email to info at intermodalics dot eu indicating which workshops you want to attend. We will confirm your participation with a short notice. Later on, you will receive a second email with more details about how to prepare. You should receive this second, detailed email in the week of February 27, 2012.

Motivation and objective

The workshop consists of three rather independent parts. It is advised, but not required, to follow the preceding session(s) when attending session two or three.

- The first session is a presentation session; it introduces the basic concepts of Orocos application programming, followed by rFSM state charts and the iTaSC framework.

- The second session is a hands-on session that aims at making the participants familiar with rFSM state charts, a powerful yet easy-to-use tool for robotic coordination and supervision tasks.

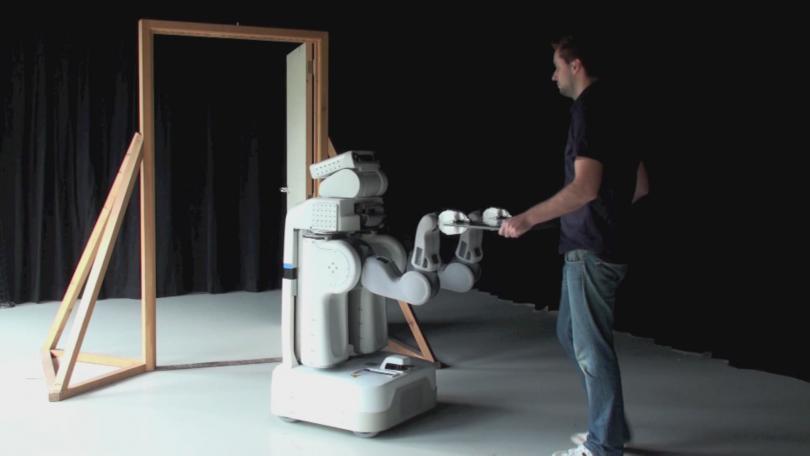

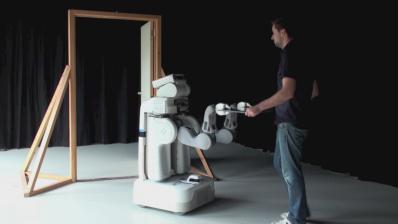

- The third session is also a hands-on session, which aims at introducing the concepts of constraint-based motion specification using the iTaSC framework. This framework and its software implementation were developed at KU Leuven during the past years. Its key advantages are the composability of (partial) constraints and the reusability of the constraint specification. The software is an open-source project, which has recently reached its 2.0 version.

Approach

- Presentation session, giving a high-level overview of rFSM and iTaSC by introducing the key concepts.

- Hands-on session: guided exercise where the participants will create an application with interacting state machines, which can be used, for example, to coordinate the behavior of the iTaSC application of the following session.

- Hands-on session: guided exercise where the participants will have to create an application consisting of multiple tasks on a robot in simulation. Eg. Drawing a figure on a table and avoiding a moving obstacle with a Kuka Youbot.

Feedback form

Participant feedback is greatly appreciated. Please fill in the feedback form. Some browsers and PDF viewers do not support filling in the form inside the browser; to avoid problems, please download the form first.

Presentations

- Session 3

- Session 1:

| Attachment | Size |
|---|---|
| RTT-Overview.pdf | 1.67 MB |
| erf_itasc_theory_opt.pdf | 526.82 KB |

Installation instructions

Ubuntu Installation with ROS

Installation

- Install ROS Electric using the Debian packages for Ubuntu Lucid (10.04) or later. If you don't run Ubuntu, you can use the ROS install scripts. See the ROS installation instructions.

- Make sure the following Debian packages are installed: ros-electric-rtt-ros-integration ros-electric-rtt-ros-comm ros-electric-rtt-geometry ros-electric-rtt-common-msgs ros-electric-pr2-controllers ros-electric-pr2-simulator ruby

- Create a directory in which you want to install all the workshop sources (for instance erf):

mkdir ~/erf

- Add this directory to your $ROS_PACKAGE_PATH:

export ROS_PACKAGE_PATH=~/erf:$ROS_PACKAGE_PATH

- Get rosinstall

sudo apt-get install python-setuptools

sudo easy_install -U rosinstall

- Get the workshop's rosinstall file and save it as erf.rosinstall in the erf folder.

- Run rosinstall

rosinstall ~/erf erf.rosinstall /opt/ros/electric

- As rosinstall instructs, source the setup script:

source ~/erf/setup.bash

- Install all dependencies (ignore warnings)

rosdep install itasc_examples

rosdep install rFSM

- Compile the workshop sources

rosmake itasc_examples

Setup

- Add the following functions in your $HOME/.bashrc file:

useERF(){ source $HOME/erf/setup.bash; source $HOME/erf/setup.sh; source /opt/ros/electric/stacks/orocos_toolchain/env.sh; setLUA; }

setLUA(){
if [ "x$LUA_PATH" == "x" ]; then LUA_PATH=";;"; fi;
if [ "x$LUA_CPATH" == "x" ]; then LUA_CPATH=";;"; fi;
export LUA_PATH="$LUA_PATH;$(rospack find rFSM)/?.lua";
export LUA_PATH="$LUA_PATH;$(rospack find ocl)/lua/modules/?.lua";
export LUA_PATH="$LUA_PATH;$(rospack find kdl)/?.lua";
export LUA_PATH="$LUA_PATH;$(rospack find rttlua_completion)/?.lua";
export LUA_PATH="$LUA_PATH;$(rospack find youbot_master_rtt)/lua/?.lua";
export LUA_PATH="$LUA_PATH;$(rospack find kdl_lua)/lua/?.lua";
export LUA_CPATH="$LUA_CPATH;$(rospack find rttlua_completion)/?.so";
export PATH="$PATH:$(rosstack find orocos_toolchain)/install/bin";
}

useERF

Running the demo

Gazebo simulation

- Open a terminal and go to the itasc_erf2012_demo package:

roscd itasc_erf2012_demo/

- Run the script that starts the Gazebo simulator (and two translator topics to communicate with the iTaSC code):

./run_gazebo.sh

- Open another terminal and go to the itasc_erf2012_demo package:

roscd itasc_erf2012_demo/

- Run the script that starts the iTaSC application:

./run_simulation.sh

Real youbot

- Make sure that you are connected to the real youbot.

- Open a terminal and go to the itasc_erf2012_demo package:

roscd itasc_erf2012_demo/

- Check the name of the network connection with the robot (for instance eth0) and set this connection name in the youbot_driver cpf file (cpf/youbot_driver.cpf).

- Run the script that starts the itasc application

./run.sh

KDL-Examples

Some small examples for usage.

Do not hesitate to add your own small examples.

rfsm-session

Additional Information on the Practical Session

Documentation Links

- rFSM documentation

- rFSM cheatsheet

- OROCOS RTT-Lua Cookbook including rFSM recipes.

- minimal rFSM - RTT example

Other

- Markus' slides (see below)

| Attachment | Size |
|---|---|
| pres.pdf | 378.19 KB |

Geometric relations semantics

Note that this wiki contains a summary of the theoretical article and the software article, both published as tutorials in IEEE Robotics and Automation Magazine.

The geometric relations semantics software (C++) implements the geometric relation semantics theory, thereby offering support for semantic checks on your rigid body relation calculations. This helps avoid common errors and hence can considerably reduce application development time and, especially, system integration time. To our knowledge, the proposed software is the first to offer a semantic interface for geometric operation software libraries.

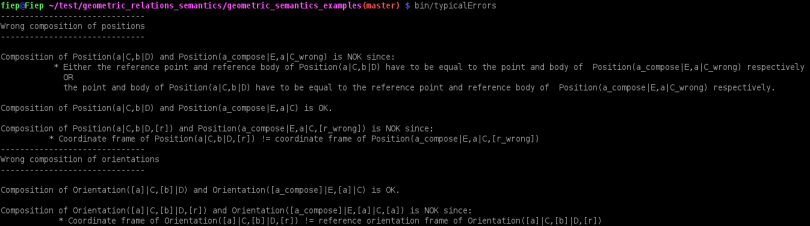

The screenshot below shows the output of the semantic checks of the (wrong) composition of two positions and two orientations.

Output of the semantic checks of the (wrong) composition of two positions and two orientations


The goal of the software is to provide semantic checking for calculations with geometric relations between rigid bodies on top of existing geometric libraries, which only work on specific coordinate representations. Since there are already many libraries with good support for geometric calculations on specific coordinate representations (the Orocos Kinematics and Dynamics Library, the ROS geometry library, Boost, ...), we chose not to design yet another library, but rather to extend these existing libraries with semantic support. The effort to extend an existing geometric library with semantic support is very limited: it boils down to implementing about six function template specializations.

What is it?

This wiki contains a summary of the article accepted as a tutorial for IEEE Robotics and Automation Magazine on 4 June 2012.

Rigid bodies are essential primitives in the modelling of robotic devices, tasks and perception, starting with basic geometric relations such as relative position, orientation, pose, translational velocity, rotational velocity, and twist. This wiki elaborates on the background and the software for the semantics underlying rigid body relationships. It is based on research of the KU Leuven robotics group, in this case mainly conducted by Tinne De Laet, which explains the semantics of all coordinate-invariant properties and operations and, more importantly, documents all the choices that are made in the coordinate representations of these geometric relations. This resulted in a set of concrete suggestions for standardizing terminology and notation, and in software with a fully unambiguous interface, including automatic checks for the semantic correctness of all geometric operations on rigid-body coordinate representations.

The geometric relations semantics software prevents commonly made errors in geometric rigid-body relations calculations like:

- Logic errors in geometric relation calculations: many logic errors can occur during geometric relation calculations. For instance (there is no need to understand the details; just look at the difference in syntax), the inverse of $\textrm{Position} \left(e|\mathcal{C}, f |\mathcal{D}\right)$ is $\textrm{Position} \left(f|\mathcal{D}, e |\mathcal{C}\right)$, while the inverse of the translational velocity $\textrm{TranslationVelocity} \left(e|\mathcal{C}, \mathcal{D}\right)$ is $\textrm{TranslationVelocity} \left(e|\mathcal{D}, \mathcal{C}\right)$. With the semantic representation proposed here, the semantics of the inverse geometric relation can be derived automatically from the forward geometric relation, preventing logic errors. A second example emerges when composing relations involving three rigid bodies: to get the geometric relation of body $\mathcal{C}$ with respect to body $\mathcal{D}$, one composes the geometric relation between $\mathcal{C}$ and a third body $\mathcal{E}$ with the geometric relation between $\mathcal{E}$ and $\mathcal{D}$ (and not, for instance, the geometric relation between $\mathcal{D}$ and $\mathcal{E}$). Such a logic constraint can easily be checked by including, for instance, the body and the reference body in the semantic representation of the geometric relations.

- Composition of twists with different velocity reference point: Composing twists requires a common velocity reference point (i.e. the twists have to express the translational velocity of the same point on the body). By including the velocity reference point of the twist in the semantic representation, this constraint can be checked explicitly.

- Composition of geometric relations expressed in different coordinate frames: Composing geometric relations using coordinate representations like position vectors, translational and rotational velocity vectors, and 6D vector twists, requires that the coordinates are expressed in the same coordinate frame. By including the coordinate frame in the coordinate semantic representation of the geometric relations, this constraint can be checked explicitly.

- Composition of poses and orientation coordinate representations in the wrong order: the rotation matrix and homogeneous transformation matrix coordinate representations can be composed using simple multiplication. However, since matrix multiplication is not commutative, a common error is to use the wrong multiplication order in the composition. The correct multiplication order follows directly when the bodies, frames, and points are included in the coordinate semantic representation of the geometric relations.

- Integration of twists when the velocity reference point and the coordinate frame do not belong to the same frame: a twist can only be integrated when it expresses the translational velocity of the origin of the coordinate frame in which the twist is expressed. When the velocity reference point and the coordinate frame are included in the coordinate semantic representation of the twist, this constraint can be checked explicitly.
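The first and fourth checks above can be illustrated without any coordinates. The following is a minimal, self-contained C++ sketch, not the geometric_semantics API: the names `PositionSem`, `TranslationalVelocitySem` and `compose` are hypothetical, and they only model the bookkeeping of points and bodies described above.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>

// Hypothetical, coordinate-free sketch (NOT the geometric_semantics API).
// Position(point|body, refPoint|refBody): position of `point`, fixed to `body`,
// with respect to `refPoint`, fixed to `refBody`.
struct PositionSem {
    std::string point, body, refPoint, refBody;

    // Inverse: Position(e|C, f|D) becomes Position(f|D, e|C).
    PositionSem inverse() const { return {refPoint, refBody, point, body}; }
};

// TranslationalVelocity(point|body, refBody): velocity of `point`, fixed to
// `body`, with respect to `refBody`.
struct TranslationalVelocitySem {
    std::string point, body, refBody;

    // Inverse: TranslationalVelocity(e|C, D) becomes TranslationalVelocity(e|D, C).
    TranslationalVelocitySem inverse() const { return {point, refBody, body}; }
};

// Composition: Position(a|C, b|E) followed by Position(b|E, c|D) yields
// Position(a|C, c|D); any other chaining is rejected.
PositionSem compose(const PositionSem& first, const PositionSem& second) {
    if (first.refPoint != second.point || first.refBody != second.body)
        throw std::invalid_argument("semantically wrong composition");
    return {first.point, first.body, second.refPoint, second.refBody};
}
```

Composing in the wrong order, for instance with the inverse of the second relation, throws instead of silently producing a wrong result.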

Background


Background and terminology

A rigid body is an idealization of a solid body of infinite or finite size in which deformation is neglected. We often abbreviate “rigid body” to “body”, and denote it by the symbol $\mathcal{A}$. A body in three-dimensional space has six degrees of freedom: three degrees of freedom in translation and three in rotation. The subspace of all body motions that involve only changes in the orientation is often denoted by SO(3) (the Special Orthogonal group in three-dimensional space). It forms a group under the operation of composition of relative motion. The space of all body motions, including translations, is denoted by SE(3) (the Special Euclidean group in three-dimensional space).

A general six-dimensional displacement between two bodies is called a (relative) pose: it contains both the position and orientation. Note that the position, orientation, and pose of a body are not absolute concepts, since they imply a second body with respect to which they are defined. Hence, only the relative position, orientation, and pose between two bodies are relevant geometric relations.

A general six-dimensional velocity between two bodies is called a (relative) twist: it contains both the rotational and the translational velocity. Similar to the position, orientation, and pose, the translational velocity, rotational velocity, and twist of a body are not absolute concepts, since they imply a second body with respect to which they are defined. Hence, only the relative translational velocity, rotational velocity, and twist between two bodies are relevant geometric relations.
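The twist-related checks from the error list above (a common velocity reference point for composition, and integrability) need only a few extra semantic fields. The sketch below is hypothetical: `TwistSem`, `compose` and `integrable` are not library names, and it assumes, as in the notation on this page, that a frame $\left\{a\right\}$ has point $a$ as its origin. It also carries the coordinate frame in which the coordinates are expressed, a choice discussed in the next paragraph.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>

// Hypothetical twist semantics (NOT the library's types): twist of `body`
// with respect to `refBody`, whose translational part is the velocity of
// point `refPoint`, with coordinates expressed in frame `coordFrame`
// whose origin is the point `coordFrameOrigin`.
struct TwistSem {
    std::string refPoint, body, refBody, coordFrame, coordFrameOrigin;
};

// Twist(C wrt E) + Twist(E wrt D) = Twist(C wrt D), but only with the same
// velocity reference point and the same coordinate frame.
TwistSem compose(const TwistSem& ce, const TwistSem& ed) {
    if (ce.refBody != ed.body)
        throw std::invalid_argument("bodies do not chain");
    if (ce.refPoint != ed.refPoint)
        throw std::invalid_argument("different velocity reference points");
    if (ce.coordFrame != ed.coordFrame)
        throw std::invalid_argument("different coordinate frames");
    return {ce.refPoint, ce.body, ed.refBody, ce.coordFrame, ce.coordFrameOrigin};
}

// A twist can only be integrated when its velocity reference point is the
// origin of the frame its coordinates are expressed in.
bool integrable(const TwistSem& t) { return t.refPoint == t.coordFrameOrigin; }
```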

When doing actual calculations with the geometric relations between rigid bodies, one has to use the coordinate representation of the geometric relations, and therefore has to choose a coordinate frame in which the coordinates are expressed in order to obtain numerical values for the geometric relations.
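To see why the coordinate frame matters, consider a single geometric relation, a vector along the Y-axis of orientation frame $\left[a\right]$, re-expressed in a frame $\left[b\right]$ that is rotated by 90 degrees about Z. In the C++ sketch below, the helper names `rotZ` and `changeCoordinateFrame` are illustrative, and the convention that the columns of the rotation matrix hold the axes of $\left[b\right]$ expressed in $\left[a\right]$ is an assumption of the sketch.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;  // row-major 3x3 matrix

// Orientation of [b] with respect to [a] for a rotation by angle t about Z:
// column j holds axis j of [b], expressed in [a].
Mat3 rotZ(double t) {
    return {{{std::cos(t), -std::sin(t), 0.0},
             {std::sin(t),  std::cos(t), 0.0},
             {0.0, 0.0, 1.0}}};
}

// Re-express coordinates given in [a] in [b]: v_b = R_ab^T * v_a.
// The vector itself (the geometric relation) does not change.
Vec3 changeCoordinateFrame(const Mat3& R_ab, const Vec3& v_a) {
    Vec3 v_b{};
    for (int i = 0; i < 3; ++i)
        for (int k = 0; k < 3; ++k)
            v_b[i] += R_ab[k][i] * v_a[k];  // indices swapped: transpose
    return v_b;
}
```

Expressed in $\left[a\right]$ the coordinates are (0, 1, 0); expressed in $\left[b\right]$ they become (1, 0, 0), with the same length. The numbers alone do not reveal which frame they belong to, which is why the coordinate frame is part of the coordinate semantics.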

Semantics

Geometric primitives

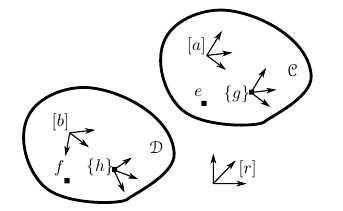

The geometric relations between bodies are described using a set of geometric primitives:

- A (spatial) point is the primitive to represent the position of a body. Points have neither volume, area, length, nor any other higher dimensional analogue. We denote points by the symbols $a$, $b$, ...

- A vector is the geometric primitive that connects a point $a$ to a point $b$. It has a magnitude (the straight-line distance between the two points), and a direction (from $a$ to $b$). To express the magnitude of a vector, a (length) scale must be chosen.

- An orientation frame represents an orientation, by means of three orthonormal vectors indicating the frame’s X-axis $X$, Y-axis $Y$, and Z-axis $Z$. We denote orientation frames by the symbols $\left[a\right]$, $\left[b\right]$, ...

- A (displacement) frame represents position and orientation of a body, by means of an orientation frame and a point (which is the orientation frame’s origin). We denote frames by the symbols $\left\{a\right\}$, $\left\{b\right\}$, ...

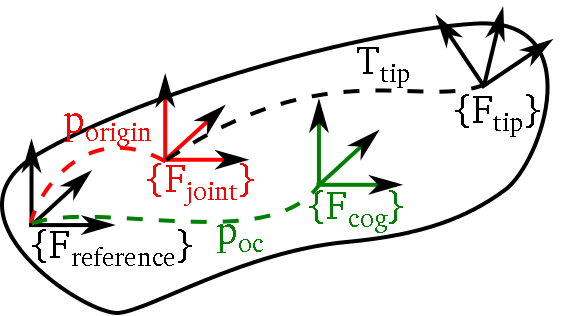

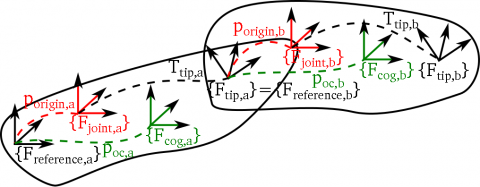

Each of these geometric primitives can be fixed to a body, which means that the geometric primitive coincides with the body not only instantaneously, but also over time. For the point $a$ and the body $\mathcal{C}$ for instance, this is written as $a|\mathcal{C}$. The figure below presents the geometric primitives body, point, vector, orientation frame, and frame graphically.

Geometric Primitives


Geometric relations

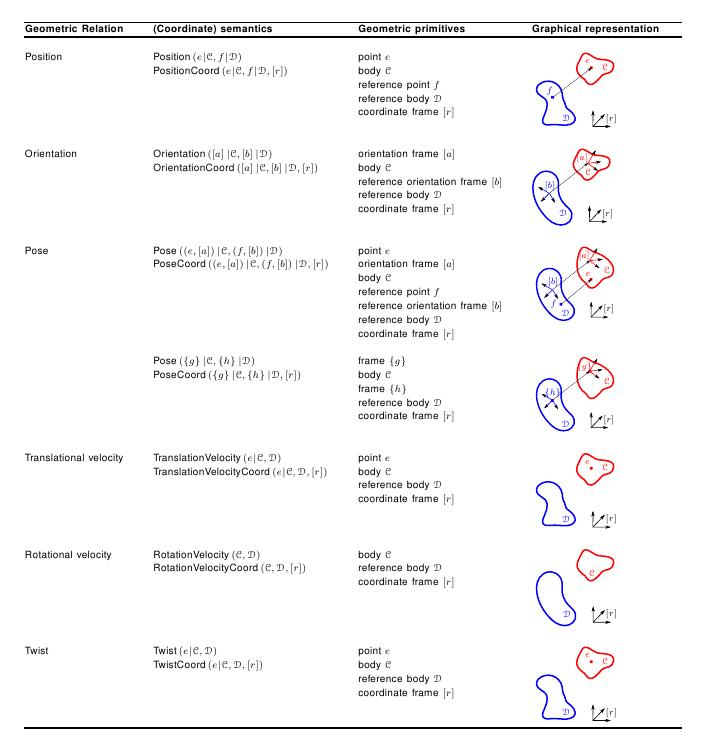

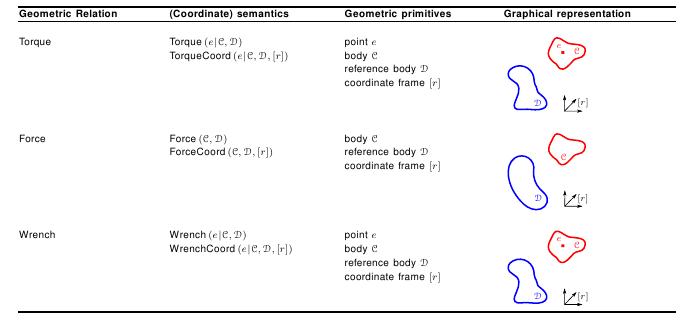

The table below summarizes the semantics for the following geometric relations between rigid bodies: position, orientation, pose, translational velocity, rotational velocity, and twist.

Geometric relations


Force, Torque, and Wrench

Screw theory, the algebra and calculus of pairs of vectors that arise in the kinematics and dynamics of rigid bodies, shows the duality between wrenches, consisting of torque and force vectors, and twists, consisting of translational and rotational velocity vectors. The parallelism between translational velocity, rotational velocity, and twist on the one hand, and torque, force, and wrench on the other hand, is directly reflected in the semantic representation (see the table below) and in the coordinate representations.

Geometric relations force, torque, and wrench

Design

- The design idea

- The design

- The software structure and content

- Core library: geometric_semantics

- KDL support: geometric_semantics_kdl

- ROS tf support: geometric_semantics_tf

- ROS messages: geometric_semantics_msgs

- ROS message conversions: geometric_semantics_msgs_conversions

- ROS tf messages support: geometric_semantics_tf_msgs

- ROS tf message conversions: geometric_semantics_tf_msgs_conversions

- Examples: geometric_semantics_examples

The software implements the geometric relation semantics, thereby offering support for semantic checks on your rigid body relations. This helps avoid common errors and hence can considerably reduce application (and, especially, system integration) development time. To our knowledge, the proposed software is the first to offer a semantic interface for geometric operation software libraries.

The design idea

The goal of the geometric_relations_semantics library is to provide semantic checking for calculations with geometric relations between rigid bodies on top of existing geometric libraries, which only work on specific coordinate representations. Since there are already many libraries with good support for geometric calculations on specific coordinate representations (the Orocos Kinematics and Dynamics Library, the ROS geometry library, Boost, ...), we chose not to design yet another library, but rather to extend these existing libraries with semantic support. The effort to extend an existing geometric library with semantic support is very limited: it boils down to implementing about six function template specializations.

For the semantic checking, we created the (templated) geometric_semantics core library, providing all the necessary semantic support for geometric relations (relative positions, orientations, poses, translational velocities, rotational velocities, twists, forces, torques, and wrenches) and the operations on these geometric relations (composition, integration, inversion, ...).

If you want to perform actual geometric relation calculations, you will need particular coordinate representations (for instance a homogeneous transformation matrix for a pose) and a geometric library offering support for calculations on these coordinate representations (for instance multiplication of homogeneous transformation matrices). To this end, you can build your own library depending on the geometric_semantics core library in which you implement a limited number of functions, which make the connection between semantic operations (for instance composition) and actual coordinate representation calculations (for instance multiplication of homogeneous transformation matrices). We already provide support for two geometric libraries: the Orocos Kinematics and Dynamics library and the ROS geometry library, in the geometric_semantics_kdl and geometric_semantics_tf libraries, respectively.

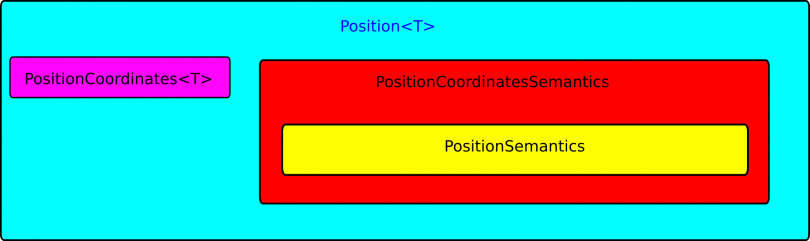

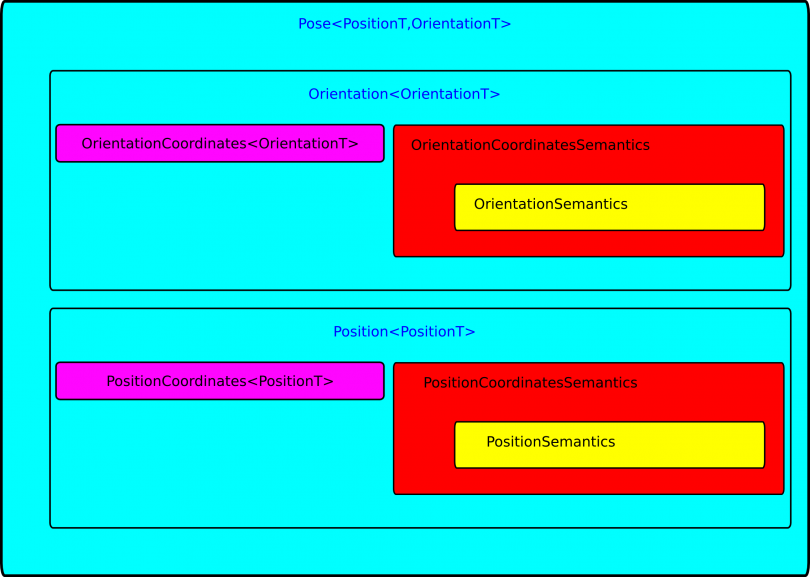

The design

For every geometric relation (position, orientation, pose, translational velocity, rotational velocity, twist, force, torque, and wrench), the geometric_semantics library contains four classes. Here we explain the design using the position geometric relation; all other geometric relations have a similar design. For the position geometric relation there are four classes:

- PositionSemantics: This class contains the semantics of the (coordinate-free) Position geometric relation. In this case, for instance, it contains the information on the point, reference point, body, and reference body.

- PositionCoordinatesSemantics: This class contains a PositionSemantics object of the geometric relation at hand and the extra semantic information needed for the semantics of the position coordinate geometric relation, i.e. the coordinate frame in which the coordinates are expressed.

- PositionCoordinates: This templated class contains the actual coordinate representation of the geometric relation, for instance a position vector for the position geometric relation. The template is the actual geometry object (of an external library) you will use as a coordinate representation, for instance a KDL::Vector.

- Position: This templated class is a composition of a PositionCoordinatesSemantics object and a PositionCoordinates object. In case you want both semantic support and want to do actual geometric calculations, this is the level you will work at.

Again, the template is the actual geometry (of an external library) you will use as a coordinate representation, for instance a KDL::Vector.
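The four levels can be mimicked in plain C++ to show how semantic checks and coordinate calculations stack up. This is a hypothetical sketch: the `...Sketch` types, `Vec3` (standing in for a KDL::Vector) and `compose` are illustrative and are not the library's classes or signatures.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>

// Level 1: coordinate-free semantics (point, body, reference point, reference body).
struct PositionSemanticsSketch {
    std::string point, body, refPoint, refBody;
};

// Level 2: level 1 plus the coordinate frame the coordinates are expressed in.
struct PositionCoordinatesSemanticsSketch {
    PositionSemanticsSketch semantics;
    std::string coordinateFrame;
};

// Level 3: only the coordinates; T plays the role of e.g. KDL::Vector.
template <typename T>
struct PositionCoordinatesSketch {
    T data;
};

// Level 4: semantics and coordinates together.
template <typename T>
struct PositionSketch {
    PositionCoordinatesSemanticsSketch semantics;
    PositionCoordinatesSketch<T> coordinates;
};

using Vec3 = std::array<double, 3>;

// Compose Position(a|C, b|E) with Position(b|E, c|D) into Position(a|C, c|D):
// check the semantics first, then the coordinate frames, then add the vectors.
PositionSketch<Vec3> compose(const PositionSketch<Vec3>& lhs,
                             const PositionSketch<Vec3>& rhs) {
    const PositionSemanticsSketch& l = lhs.semantics.semantics;
    const PositionSemanticsSketch& r = rhs.semantics.semantics;
    if (l.refPoint != r.point || l.refBody != r.body)
        throw std::invalid_argument("semantically wrong composition order");
    if (lhs.semantics.coordinateFrame != rhs.semantics.coordinateFrame)
        throw std::invalid_argument("coordinates expressed in different frames");
    Vec3 sum{};
    for (std::size_t i = 0; i < sum.size(); ++i)
        sum[i] = lhs.coordinates.data[i] + rhs.coordinates.data[i];
    return {{{l.point, l.body, r.refPoint, r.refBody},
             lhs.semantics.coordinateFrame},
            {sum}};
}
```

Because the level-4 object contains a level-2 object, which in turn contains a level-1 object, the semantic checks can run on their own, while the vector addition only touches level 3, mirroring the split described above.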

The design described above is illustrated by the figure below.

Position geometric relation design

Note that all four of the above 'levels' are of practical use:

- PositionSemantics: to do coordinate-free semantic checking (without actual geometric calculations);

- PositionCoordinatesSemantics: to do semantic checking involving coordinate systems (without actual geometric calculations);

- PositionCoordinates: to do the actual geometric calculations; and

- Position: to do both semantic checking and the actual geometric calculations.

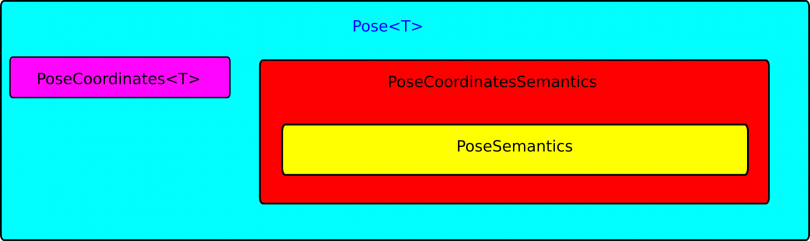

Pose, Twist, and Wrench

We need to give some extra information on the pose, twist, and wrench geometric relations, since they can be represented either as a composition of two other geometric relations (Pose = Position + Orientation, Twist = TranslationalVelocity + RotationalVelocity, Wrench = Force + Torque) or as a new geometric relation. For example, we may want to use a homogeneous transformation matrix as the coordinate representation of a pose, in which case we would also want, for efficiency reasons, to do calculations directly on the homogeneous transformation matrices. In another case, we may want to represent the pose as the composition of a position (with, for instance, a position vector as coordinate representation) and an orientation (with, for instance, Euler angles as coordinate representation). The software allows both designs, as illustrated in the two figures below.

Pose geometric relation design as a basic geometric relation

Pose geometric relation design as a composition of a Position and Orientation geometric relation
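Both pose designs must give the same result. The planar (SE(2)) C++ sketch below is a simplification of the 3D case and uses hypothetical names: `Mat3` plays the role of a homogeneous transformation matrix (the basic design), and `PoseAsPair` holds an orientation angle plus a position vector (the composition design). Composing the pose of B with respect to C with the pose of A with respect to B is one matrix product in the first design, and $\theta_{CA} = \theta_{CB} + \theta_{BA}$, $p_{CA} = p_{CB} + R(\theta_{CB})\,p_{BA}$ in the second.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Design 1 (basic relation): planar pose as a 3x3 homogeneous matrix.
using Mat3 = std::array<std::array<double, 3>, 3>;

Mat3 multiply(const Mat3& a, const Mat3& b) {
    Mat3 c{};  // zero-initialized
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k)
                c[i][j] += a[i][k] * b[k][j];
    return c;
}

Mat3 homogeneous(double theta, double x, double y) {
    return {{{std::cos(theta), -std::sin(theta), x},
             {std::sin(theta),  std::cos(theta), y},
             {0.0, 0.0, 1.0}}};
}

// Design 2 (composition): orientation coordinates plus position coordinates.
struct PoseAsPair {
    double theta;  // orientation (rotation angle)
    double x, y;   // position vector
};

// Pose of B w.r.t. C composed with pose of A w.r.t. B gives pose of A w.r.t. C.
PoseAsPair compose(const PoseAsPair& bWrtC, const PoseAsPair& aWrtB) {
    const double c = std::cos(bWrtC.theta), s = std::sin(bWrtC.theta);
    return {bWrtC.theta + aWrtB.theta,
            bWrtC.x + c * aWrtB.x - s * aWrtB.y,
            bWrtC.y + s * aWrtB.x + c * aWrtB.y};
}
```

Swapping the two factors of `multiply` gives a different pose, which is exactly the wrong-order error that the semantic checks are meant to catch.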

The software structure and content

Core library: geometric_semantics

KDL support: geometric_semantics_kdl

ROS tf support: geometric_semantics_tf

ROS messages: geometric_semantics_msgs

ROS message conversions: geometric_semantics_msgs_conversions

ROS tf messages support: geometric_semantics_tf_msgs

ROS tf message conversions: geometric_semantics_tf_msgs_conversions

Examples: geometric_semantics_examples

API

The API is available at: http://people.mech.kuleuven.be/~tdelaet/geometric_relations_semantics/doc/.

Quick start

Overview

The framework follows the Orocos-ROS approach and consists of one stack:

- geometric_relations_semantics.

This stack consists of the following packages:

- geometric_semantics: geometric_semantics is the core of the geometric_relations_semantics stack and provides C++ code for the semantic support of geometric relations between rigid bodies (relative position, orientation, pose, translational velocity, rotational velocity, twist, force, torque, and wrench). If you want to use semantic checking for the geometric relation operations between rigid bodies in your application, you can check the geometric_semantics_examples package. If you want to create support for your own geometry types on top of the geometric_semantics package, the geometric_semantics_kdl package provides a good starting point.

- geometric_semantics_examples: geometric_semantics_examples groups some examples showing how the geometric_semantics can be used to provide semantic checking for the geometric relations between rigid bodies in your application.

- geometric_semantics_orocos_typekit: geometric_semantics_orocos_typekit provides Orocos typekit support for the geometric_semantics types, such that the geometric semantics types are visible within Orocos (in the TaskBrowser component, in Orocos script, reporting, reading and writing to files (for instance for properties), ... ).

- geometric_semantics_orocos_typekit_kdl: geometric_semantics_orocos_typekit_kdl provides Orocos typekit support for geometric semantics coordinate representations using KDL types.

- geometric_semantics_msgs: geometric_semantics_msgs provides ROS messages matching the C++ types defined in the geometric_semantics package, in order to provide semantic support during message-based communication.

- geometric_semantics_msgs_conversions: geometric_semantics_msgs_conversions provides conversions between geometric_semantics_msgs and the C++ geometric_semantics types defined in the geometric_semantics package.

- geometric_semantics_kdl: geometric_semantics_kdl provides support for orocos_kdl types on top of the geometric_semantics package (for instance KDL::Frame to represent the relative pose of two rigid bodies). If you want to create support for your own geometry types on top of the geometric_semantics package, this package provides a good starting point.

- geometric_semantics_tf: geometric_semantics_tf provides support for tf datatypes (see http://www.ros.org/wiki/tf/Overview/Data%20Types) on top of the geometric_semantics package (for instance tf::Pose to represent the relative pose of two rigid bodies).

- geometric_semantics_tf_msgs: geometric_semantics_tf_msgs provides ROS messages matching the C++ types defined in the geometric_semantics_tf package, in order to provide semantic support for tf types during message-based communication.

- geometric_semantics_tf_msgs_conversions: geometric_semantics_tf_msgs_conversions provides conversions between geometric_semantics_tf_msgs and the C++ geometric_semantics_tf types defined in the geometric_semantics_tf package.

Each package contains the following subdirectories:

- src/ containing the source code of the components (mainly C++ or python for the ROS msgs support).

Installation instructions

Warning: so far we only provide support for Linux-based systems. For Windows or Mac you are on your own, but we are always interested in your experiences and in extensions of the installation instructions, quick start guide, and user guide.

Dependencies

- Boost version 1.42 or later (on Ubuntu you can use the libboost1.4*-dev packages)

- Orocos Kinematics and Dynamics Library 0.2.3 or later. For more information, visit the Orocos website

- rtt_geometry stack (the Orocos typekit support for KDL types), which introduces a dependency on the kdl_typekit

- ROS Electric/Fuerte/Groovy: optional unless you want to use the PR2, but highly recommended, since its build system and tools such as rospack find are practical and are used in the example scripts

- ros_comm

- geometry

- common_msgs

Compiling from source

- First you should get the sources from git using:

git clone https://gitlab.mech.kuleuven.be/rob-dsl/geometric-relations-semantics.git geometric_relations_semantics

- Go into the geometric_relations_semantics directory using:

cd geometric_relations_semantics

- Add this directory to your ROS_PACKAGE_PATH environment variable using:

export ROS_PACKAGE_PATH=$PWD:$ROS_PACKAGE_PATH

- Install the dependencies and build the library using:

rosdep install geometric_relations_semantics

rosmake geometric_relations_semantics

- Everything should compile out of the box, and you are now ready to start using geometric relation semantics support.

- If you want to run the tests of a package, use, for instance (for the geometric_semantics core package):

roscd geometric_semantics

make test

Setup

It is strongly recommended that you add the geometric_relations_semantics directory to your ROS_PACKAGE_PATH in your .bashrc file.

(Re)building the stack or individual packages

- To build the geometric_relations_semantics stack use:

rosmake geometric_relations_semantics

- You can also (re)build any package individually using:

rosmake PACKAGE_NAME

Running the tests

- If you want to run the tests of a package, first go to the package directory, for instance (for the geometric_semantics core package):

roscd geometric_semantics

- And next make and run the tests:

make test

User guide

If you are looking for installation instructions you should read the quick start.

Setting the build options of the core library

You can customize the behavior of the semantic checking (checking or not, and screen output or not) by changing the build options of the geometric_semantics library (see the CMakeLists.txt of the geometric_semantics package):

- add_definitions(-DCHECK): when using this build flag, the semantic checking will be enabled.

- add_definitions(-DOUTPUT_CORRECT): when using this build flag, you will get screen output for operations that are semantically correct.

- add_definitions(-DOUTPUT_WRONG): when using this build flag, you will get screen output for operations that are semantically wrong.
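These options are ordinary preprocessor definitions: add_definitions(-DCHECK) makes the macro CHECK visible to the compiler. How the library uses them internally is not shown on this page; the hypothetical helper `reportSemanticCheck` below only sketches one plausible way such flags can switch checking and screen output on or off at compile time.

```cpp
#include <cassert>
#include <iostream>
#include <string>

// Hypothetical helper, NOT taken from geometric_semantics: illustrates how the
// CHECK, OUTPUT_CORRECT and OUTPUT_WRONG macros could gate checking and output.
bool reportSemanticCheck(bool semanticallyCorrect, const std::string& operation) {
    (void)operation;  // unused when no output flag is defined
#ifdef CHECK
#ifdef OUTPUT_CORRECT
    if (semanticallyCorrect)
        std::cout << "Semantically correct: " << operation << '\n';
#endif
#ifdef OUTPUT_WRONG
    if (!semanticallyCorrect)
        std::cout << "Semantically WRONG: " << operation << '\n';
#endif
    return semanticallyCorrect;
#else
    (void)semanticallyCorrect;
    return true;  // checking compiled out: every operation is accepted
#endif
}
```

Compiling with, for instance, -DCHECK -DOUTPUT_WRONG would then print only the semantically wrong operations, while building without -DCHECK removes the checks entirely.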

Using the geometric relations semantics in your own application

Here we will explain how you can use the geometric relations semantics in your application, in particular using the Orocos Kinematics and Dynamics library as a geometry library, supplemented with the semantic support.

Preparing your own application using the ROS-build system

- Create a new ROS package (in this case with name myApplication), with a dependency on the geometric_semantics_kdl:

roscreate-pkg myApplication geometric_semantics_kdl

This will automatically create a directory with name myApplication and a basic build infrastructure (see the roscreate-pkg documentation)

- Add the newly created directory to your ROS_PACKAGE_PATH environment variable:

cd myApplication

export ROS_PACKAGE_PATH=$PWD:$ROS_PACKAGE_PATH

Writing your own application

- Go to the application directory:

roscd myApplication

- Create a main C++ file

touch myApplication.cpp

- Edit the C++ file with your favorite editor

- Include the necessary headers. For instance:

#include <Pose/Pose.h>

#include <Pose/PoseCoordinatesKDL.h>

- It can be convenient to use the geometric_semantics namespace and for instance the one of your geometry library (in this case KDL):

using namespace geometric_semantics; using namespace KDL;

- In your main you should create the necessary geometric relations. For instance for a pose, first create the KDL coordinates:

Rotation coordinatesRotB2_B1=Rotation::EulerZYX(M_PI,0,0); Vector coordinatesPosB2_B1(2.2,0,0); KDL::Frame coordinatesFrameB2_B1(coordinatesRotB2_B1,coordinatesPosB2_B1);

Then use these KDL coordinates to create a PoseCoordinates object:

PoseCoordinates<KDL::Frame> poseCoordB2_B1(coordinatesFrameB2_B1);

Then create a Pose object using both the semantic information and the PoseCoordinates:

Pose<KDL::Frame> poseB2_B1("b2","b2","B2","b1","b1","B1","b1",poseCoordB2_B1);

- Now you are ready to do actual calculations using semantic checking. For instance to take the inverse:

Pose<KDL::Frame> poseB1_B2 = poseB2_B1.inverse();

Building your own application

- To build your application, edit the CMakeLists.txt file created in your application directory and add your C++ main file as an executable by adding the following line:

rosbuild_add_executable(myApplication myApplication.cpp)

- Now you are ready to build, so type

rosmake myApplication

and the executable will be created in the bin directory.

- To run the executable do:

bin/myApplication

You will get the semantic output on your screen.

Extending your geometry library with semantic checking

Imagine you have your own geometry library with support for geometric relation coordinate representations and calculations with these coordinate representations. You would, however, like to have semantic support on top of this geometry library. Probably the best thing to do in this case is to mimic our support for the Orocos Kinematics and Dynamics Library. To have a look at it, do:

roscd geometric_semantics_kdl/

Template specialization

The only thing you have to do is write template specializations. For instance, to get support for KDL::Rotation, which is a coordinate representation for an Orientation geometric relation, you have to write the template specialization for OrientationCoordinates<T>, i.e. OrientationCoordinates<KDL::Rotation>.

Semantic constraints invoked by your coordinate representations

The first thing to find out is which semantic constraints are imposed by the particular coordinate representation you use. For instance, a KDL::Rotation represents a 3x3 rotation matrix and imposes the semantic constraint that the reference orientation frame is equal to the coordinate frame. The possible semantic constraints are listed in the *Coordinates.h files in the geometric_semantics core library. For instance, for OrientationCoordinates you will find an enumeration of the different possible semantic constraints imposed by Orientation coordinate representations:

/** \brief Constraints imposed by the orientation coordinate representation to the semantics */ enum Constraints{ noConstraints = 0x00, coordinateFrame_equals_referenceOrientationFrame = 0x01 /* constraint that the orientation frame on the reference body has to be equal to the coordinate frame */ };

You should specify the constraint when writing the template specialization of the OrientationCoordinates<KDL::Rotation>:

/* template specialization for KDL::Rotation */ template <> OrientationCoordinates<KDL::Rotation>::OrientationCoordinates(const KDL::Rotation& coordinates): data(coordinates), constraints(coordinateFrame_equals_referenceOrientationFrame){ }
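The effect of specializing only the constructor can be reproduced without KDL. In the self-contained sketch below, `OrientationCoordinatesSketch`, `RotationMatrixStub` and `EulerAnglesStub` are hypothetical stand-ins, and the choice that the primary template defaults to `noConstraints` belongs to this sketch, not necessarily to the library.

```cpp
#include <cassert>

// Same enumerators as in the library excerpt above.
enum Constraints {
    noConstraints = 0x00,
    coordinateFrame_equals_referenceOrientationFrame = 0x01
};

struct RotationMatrixStub { double angle; };  // hypothetical rotation-matrix type
struct EulerAnglesStub { double z, y, x; };   // hypothetical Euler-angle type

template <typename T>
class OrientationCoordinatesSketch {
public:
    explicit OrientationCoordinatesSketch(const T& coordinates);
    const T& data() const { return data_; }
    int constraints() const { return constraints_; }
private:
    T data_;
    int constraints_;
};

// Primary template (sketch assumption): no constraint by default.
template <typename T>
OrientationCoordinatesSketch<T>::OrientationCoordinatesSketch(const T& coordinates)
    : data_(coordinates), constraints_(noConstraints) {}

// Explicit specialization of the constructor only: a rotation matrix ties the
// coordinate frame to the reference orientation frame, so it records that constraint.
template <>
OrientationCoordinatesSketch<RotationMatrixStub>::OrientationCoordinatesSketch(
    const RotationMatrixStub& coordinates)
    : data_(coordinates),
      constraints_(coordinateFrame_equals_referenceOrientationFrame) {}
```

The rotation-matrix stub records the constraint, while a type without a specialized constructor, such as the Euler-angle stub here, falls back to the primary template.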

Specializing other functions to do actual coordinate calculations

The other function template specializations specify the actual coordinate calculations that have to be performed for semantic operations such as taking the inverse, changing the coordinate frame, changing the orientation frame, and so on. For instance, to specialize the inverse for KDL::Rotation coordinate representations:

template <>
OrientationCoordinates<KDL::Rotation> OrientationCoordinates<KDL::Rotation>::inverse2Impl() const
{
    return OrientationCoordinates<KDL::Rotation>(this->data.Inverse());
}

Tutorials

Setting up a package and the build system for your application

This tutorial explains one possible way to set up a build system for your application using geometric_relations_semantics. The approach described here uses the ROS package and build infrastructure, and therefore assumes you have ROS installed and set up on your computer.

- Create a new ROS package (in this case named myApplication) with a dependency on the geometric_semantics library and, for instance, the geometric_semantics_kdl library:

roscreate-pkg myApplication geometric_semantics geometric_semantics_kdl

This automatically creates a directory named myApplication with a basic build infrastructure (see the roscreate-pkg documentation).

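- Add the newly created directory to your ROS_PACKAGE_PATH environment variable. Run the following from the directory in which you created the package, so that an absolute path is added:

export ROS_PACKAGE_PATH=$(pwd)/myApplication:$ROS_PACKAGE_PATH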

Your first application using semantic checking on geometric relations (without coordinate checking)

This tutorial assumes you have prepared a ROS package named myApplication and have set your ROS_PACKAGE_PATH environment variable accordingly, as explained in the tutorial on setting up a package and the build system for your application.

In this tutorial we first explain how you can create basic semantic objects (without coordinates and without coordinate checking) and perform semantic operations on them. We will show how you can create any of the supported geometric relations: position, orientation, pose, translational velocity, rotational velocity, twist, force, torque, and wrench.

Note that the complete file resulting from this tutorial is attached to this wiki page.

Prepare the main file

- Go to the directory of our first application using:

roscd myApplication

- Create a main file (in this tutorial called myFirstApplication.cpp) in which we will put the code of our first application.

touch myFirstApplication.cpp

- Edit the C++ file with your favorite editor. For instance:

vim myFirstApplication.cpp

- Include the necessary headers.

#include <iostream>
#include <Position/PositionSemantics.h>
#include <Orientation/OrientationSemantics.h>
#include <Pose/PoseSemantics.h>
#include <LinearVelocity/LinearVelocitySemantics.h>
#include <AngularVelocity/AngularVelocitySemantics.h>
#include <Twist/TwistSemantics.h>
#include <Force/ForceSemantics.h>
#include <Torque/TorqueSemantics.h>
#include <Wrench/WrenchSemantics.h>

- Next we use the geometric_semantics namespace for convenience:

using namespace geometric_semantics;

- Create a main program:

int main(int argc, char* argv[])
{
    // Here comes the code of our first application
}

Building your first application

- To build your application, edit the CMakeLists.txt file created in your application directory.

vim CMakeLists.txt

- Add the C++ main file to be built as an executable by adding the following line:

rosbuild_add_executable(myFirstApplication myFirstApplication.cpp)

- Now you are ready to build, so type:

rosmake myApplication

and the executable will be created in the bin directory.

- To run the executable do:

bin/myFirstApplication

You will get the semantic output on your screen.

Creating the geometric relations semantics

- We start by creating the geometric relation semantics objects for the relation between body C, with point a and orientation frame [e], and body D, with point b and orientation frame [f]:

// Creating the geometric relations semantics
PositionSemantics position("a","C","b","D");
OrientationSemantics orientation("e","C","f","D");
PoseSemantics pose("a","e","C","b","f","D");
LinearVelocitySemantics linearVelocity("a","C","D");
AngularVelocitySemantics angularVelocity("C","D");
TwistSemantics twist("a","C","D");
TorqueSemantics torque("a","C","D");
ForceSemantics force("C","D");
WrenchSemantics wrench("a","C","D");

Doing semantic operations

- We can, for instance, take the inverses of the created semantic geometric relations:

//Doing semantic operations with the geometric relations
// inverting
PositionSemantics positionInv = position.inverse();
OrientationSemantics orientationInv = orientation.inverse();
PoseSemantics poseInv = pose.inverse();
LinearVelocitySemantics linearVelocityInv = linearVelocity.inverse();
AngularVelocitySemantics angularVelocityInv = angularVelocity.inverse();
TwistSemantics twistInv = twist.inverse();
TorqueSemantics torqueInv = torque.inverse();
ForceSemantics forceInv = force.inverse();
WrenchSemantics wrenchInv = wrench.inverse();

std::cout << "-----------------------------------------" << std::endl;
std::cout << "Inverses: " << std::endl;
std::cout << " " << positionInv << " is the inverse of " << position << std::endl;
std::cout << " " << orientationInv << " is the inverse of " << orientation << std::endl;
std::cout << " " << poseInv << " is the inverse of " << pose << std::endl;
std::cout << " " << linearVelocityInv << " is the inverse of " << linearVelocity << std::endl;
std::cout << " " << angularVelocityInv << " is the inverse of " << angularVelocity << std::endl;
std::cout << " " << twistInv << " is the inverse of " << twist << std::endl;
std::cout << " " << torqueInv << " is the inverse of " << torque << std::endl;
std::cout << " " << forceInv << " is the inverse of " << force << std::endl;
std::cout << " " << wrenchInv << " is the inverse of " << wrench << std::endl;

- Next, we can for instance compose the relations with their inverses. Note that the order of the arguments does not matter, since the composition order is derived automatically from the semantic information in the objects.

//Composing
PositionSemantics positionComp = compose(position,positionInv);
OrientationSemantics orientationComp = compose(orientation,orientationInv);
PoseSemantics poseComp = compose(pose,poseInv);
LinearVelocitySemantics linearVelocityComp = compose(linearVelocity,linearVelocityInv);
AngularVelocitySemantics angularVelocityComp = compose(angularVelocity,angularVelocityInv);
TwistSemantics twistComp = compose(twist,twistInv);
TorqueSemantics torqueComp = compose(torque,torqueInv);
ForceSemantics forceComp = compose(force,forceInv);
WrenchSemantics wrenchComp = compose(wrench,wrenchInv);

std::cout << "-----------------------------------------" << std::endl;
std::cout << "Composed objects: " << std::endl;
std::cout << " " << positionComp << " is the composition of " << position << " and " << positionInv << std::endl;
std::cout << " " << orientationComp << " is the composition of " << orientation << " and " << orientationInv << std::endl;
std::cout << " " << poseComp << " is the composition of " << pose << " and " << poseInv << std::endl;
std::cout << " " << linearVelocityComp << " is the composition of " << linearVelocity << " and " << linearVelocityInv << std::endl;
std::cout << " " << angularVelocityComp << " is the composition of " << angularVelocity << " and " << angularVelocityInv << std::endl;
std::cout << " " << twistComp << " is the composition of " << twist << " and " << twistInv << std::endl;
std::cout << " " << torqueComp << " is the composition of " << torque << " and " << torqueInv << std::endl;
std::cout << " " << forceComp << " is the composition of " << force << " and " << forceInv << std::endl;
std::cout << " " << wrenchComp << " is the composition of " << wrench << " and " << wrenchInv << std::endl;

| Attachment | Size |
|---|---|
| myFirstApplication.cpp | 4.28 KB |

Your second application using semantic checking on geometric relations including coordinate checking

This tutorial assumes you have prepared a ROS package named myApplication and have set your ROS_PACKAGE_PATH environment variable accordingly, as explained in the tutorial on setting up a package and the build system for your application.

In this tutorial we first explain how you can create basic semantic objects (without coordinates, but with coordinate checking) and perform semantic operations on them. We will show how you can create any of the supported geometric relations: position, orientation, pose, translational velocity, rotational velocity, twist, force, torque, and wrench.

Note that the complete file resulting from this tutorial is attached to this wiki page.

Prepare the main file

- Prepare a mySecondApplication.cpp main file as explained in the tutorial for your first application.

- Edit the C++ file with your favorite editor. For instance:

vim mySecondApplication.cpp

- Include the necessary headers.

#include <iostream>
#include <Position/PositionCoordinatesSemantics.h>
#include <Orientation/OrientationCoordinatesSemantics.h>
#include <Pose/PoseCoordinatesSemantics.h>
#include <LinearVelocity/LinearVelocityCoordinatesSemantics.h>
#include <AngularVelocity/AngularVelocityCoordinatesSemantics.h>
#include <Twist/TwistCoordinatesSemantics.h>
#include <Force/ForceCoordinatesSemantics.h>
#include <Torque/TorqueCoordinatesSemantics.h>
#include <Wrench/WrenchCoordinatesSemantics.h>

- Next we use the geometric_semantics namespace for convenience:

using namespace geometric_semantics;

- Create a main program:

int main(int argc, char* argv[])
{
    // Here comes the code of our second application
}

Building your second application

- To build your application, edit the CMakeLists.txt file created in your application directory. Add your C++ main file to be built as an executable by adding the following line:

rosbuild_add_executable(mySecondApplication mySecondApplication.cpp)

- Now you are ready to build, so type:

rosmake myApplication

and the executable will be created in the bin directory.

- To run the executable do:

bin/mySecondApplication

You will get the semantic output on your screen.

Creating the geometric relations coordinates semantics

- We start by creating the geometric relation coordinates semantics objects for the relation between body C, with point a and orientation frame [e], and body D, with point b and orientation frame [f], all expressed in coordinate frame [r]:

// Creating the geometric relations coordinates semantics
PositionCoordinatesSemantics position("a","C","b","D","r");
OrientationCoordinatesSemantics orientation("e","C","f","D","r");
PoseCoordinatesSemantics pose("a","e","C","b","f","D","r");
LinearVelocityCoordinatesSemantics linearVelocity("a","C","D","r");
AngularVelocityCoordinatesSemantics angularVelocity("C","D","r");
TwistCoordinatesSemantics twist("a","C","D","r");
TorqueCoordinatesSemantics torque("a","C","D","r");
ForceCoordinatesSemantics force("C","D","r");
WrenchCoordinatesSemantics wrench("a","C","D","r");

Doing semantic coordinate operations

- We can, for instance, take the inverses of the created geometric relation coordinates semantics:

//Doing semantic operations with the geometric relations
// inverting
PositionCoordinatesSemantics positionInv = position.inverse();
OrientationCoordinatesSemantics orientationInv = orientation.inverse();
PoseCoordinatesSemantics poseInv = pose.inverse();
LinearVelocityCoordinatesSemantics linearVelocityInv = linearVelocity.inverse();
AngularVelocityCoordinatesSemantics angularVelocityInv = angularVelocity.inverse();
TwistCoordinatesSemantics twistInv = twist.inverse();
TorqueCoordinatesSemantics torqueInv = torque.inverse();
ForceCoordinatesSemantics forceInv = force.inverse();
WrenchCoordinatesSemantics wrenchInv = wrench.inverse();

std::cout << "-----------------------------------------" << std::endl;
std::cout << "Inverses: " << std::endl;
std::cout << " " << positionInv << " is the inverse of " << position << std::endl;
std::cout << " " << orientationInv << " is the inverse of " << orientation << std::endl;
std::cout << " " << poseInv << " is the inverse of " << pose << std::endl;
std::cout << " " << linearVelocityInv << " is the inverse of " << linearVelocity << std::endl;
std::cout << " " << angularVelocityInv << " is the inverse of " << angularVelocity << std::endl;
std::cout << " " << twistInv << " is the inverse of " << twist << std::endl;
std::cout << " " << torqueInv << " is the inverse of " << torque << std::endl;
std::cout << " " << forceInv << " is the inverse of " << force << std::endl;
std::cout << " " << wrenchInv << " is the inverse of " << wrench << std::endl;

- Next, we can for instance compose the relations with their inverses. Note that the order of the arguments does not matter, since the composition order is derived automatically from the semantic information in the objects.